BASIC IDEA

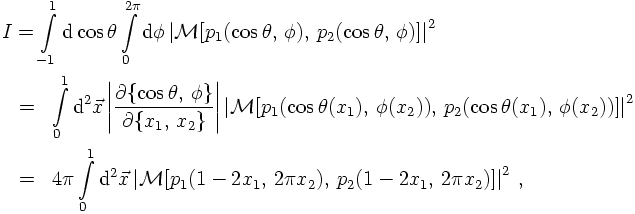

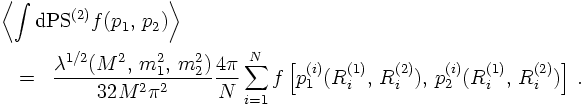

In the previous sections, higher-dimensional phase space integrals for processes with n outgoing particles have been evaluated analytically. In doing so, however, the squared amplitude has been neglected entirely; implicitly, it was set to 1. In practical applications this function of the four-momenta has to be included in the integration, which makes the task considerably harder. In many practical cases, especially if n becomes larger than three or if non-trivial cuts on the phase space have to be taken into account, an analytical solution of the integral is impossible and numerical methods have to be applied. Due to the high dimensionality of the phase space, standard textbook methods such as simple quadratures become prohibitively time-consuming, and different methods suitable for high-dimensional integration have to be devised. One of these methods is called Monte-Carlo integration. The basic idea is the following: take a statistically significant sample of the function by evaluating it at a large number N of points. The average of this sample provides an estimator <I> of the integral. Writing the phase space volume as an n-dimensional hypercube with edges of length 1, this amounts to replacing the integral by the average of the sampled function values.

TRUE VALUE, ESTIMATED VALUE, CONFIDENCE AND CONVERGENCE

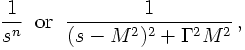

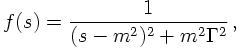

In principle, in the limit of N going to infinity, the estimated value <I> of the integral should approach its true value I. This limit, however, cannot be reached in practice. Therefore, it is a priori not clear how well the estimate describes reality. In fact, during numerical integration, i.e. during the sampling, <I> will in all practical cases fluctuate. Sometimes these fluctuations will be severe oscillations, with large factors between estimators taken after different numbers N of sampling points. This is especially true if the integrand itself is a wildly fluctuating function, spanning orders of magnitude and, to make things even worse, possibly exhibiting extremely sharp peaks. Such peaks can easily be missed for a long time while sampling; the moment one or more of them contribute to the result, the corresponding estimator changes abruptly. Examples of such fluctuating functions, which occur frequently in the evaluation of cross sections, are connected to "propagator terms" and have the form

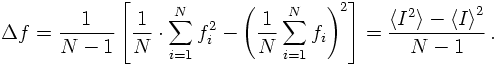
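The jumps of the estimator can be made visible by printing the running average after different numbers of points. The sketch below assumes a Breit-Wigner-like peak as the integrand; the exact propagator form used in these notes may differ, and the parameter values `M2` and `MG` as well as the function names are purely illustrative.

```python
import random

M2 = 0.5      # hypothetical peak position in s (arbitrary units)
MG = 1.0e-3   # hypothetical M*Gamma, which sets the width of the peak

def propagator_like(s):
    # Assumed Breit-Wigner-like shape with a very narrow peak at s = M2
    return 1.0 / ((s - M2) ** 2 + MG ** 2)

def running_estimates(f, checkpoints, seed=1):
    """Return (N, <I>) pairs for uniform sampling of f on [0,1] at growing N."""
    rng = random.Random(seed)
    total = 0.0
    n = 0
    results = []
    for target in checkpoints:
        while n < target:
            total += f(rng.random())
            n += 1
        results.append((n, total / n))
    return results

for n, est in running_estimates(propagator_like, [10, 100, 1_000, 10_000, 100_000]):
    print(n, est)
```

With so narrow a peak, the early estimates depend almost entirely on whether a point happened to land close to the peak, so successive values can differ by large factors.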

Now it is time to employ a measure from statistics to judge the size of the fluctuations relative to the average, namely the variance. In doing so, some implicit assumptions are made: first, that the sample size N is large enough for statistical methods to be valid; second, that the sampling points have been chosen independently of each other. Under these assumptions the variance is a good way to estimate the error range of the estimator. To employ this concept in Monte-Carlo integration, not only the function has to be sampled but also its square. The variance Δf then follows from the sample averages of the function and of its square.
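In the standard form (the notation here is an assumption, chosen to match the σ used further below), the two sample averages and the resulting error estimate of the estimator read:

```latex
\langle f \rangle = \frac{1}{N}\sum_{i=1}^{N} f(x_i), \qquad
\langle f^2 \rangle = \frac{1}{N}\sum_{i=1}^{N} f^2(x_i), \qquad
\sigma = \sqrt{\frac{\langle f^2 \rangle - \langle f \rangle^2}{N}} .
```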

From this it is apparent why for large dimensions the Monte-Carlo integration technique is superior to quadratures: the error scales like the inverse square root of N, independently of the number of dimensions, whereas the convergence of quadrature rules on a fixed grid deteriorates as the dimension grows.
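The scaling of the error with N can be checked numerically by accumulating both the function and its square. The following is a sketch under stated assumptions: `mc_with_error` and the smooth toy integrand `smooth` are hypothetical names, and the integrand is chosen only because its exact integral over the unit hypercube, dim/3, is known.

```python
import math
import random

def mc_with_error(f, dim, n_points, seed=2024):
    """Return (estimate, error estimate) for the integral of f over [0,1]^dim."""
    rng = random.Random(seed)
    sum_f = 0.0
    sum_f2 = 0.0
    for _ in range(n_points):
        x = [rng.random() for _ in range(dim)]
        fx = f(x)
        sum_f += fx        # accumulates the function ...
        sum_f2 += fx * fx  # ... and its square
    mean = sum_f / n_points
    mean_sq = sum_f2 / n_points
    # Guard against tiny negative values caused by rounding
    variance = max(mean_sq - mean * mean, 0.0)
    return mean, math.sqrt(variance / n_points)

def smooth(x):
    # Toy integrand (assumption): sum of squares, exact integral is dim/3
    return sum(xi * xi for xi in x)

for n in (1_000, 10_000, 100_000):
    est, err = mc_with_error(smooth, dim=6, n_points=n)
    print(n, est, err)
```

Each increase of N by a factor of 100 should reduce the printed error by roughly a factor of 10, regardless of the value chosen for `dim`.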

This behaviour is exemplified in a test integration.

The reasoning above implies that in Monte-Carlo integration the name of the game is to minimise σ as quickly as possible in order to achieve a reliable estimate of the true value of the integral. For wildly fluctuating functions this can be a real problem, and solutions in such cases are sometimes far from trivial.

BASIC MAPPINGS

Decays:

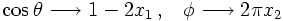

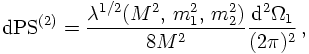

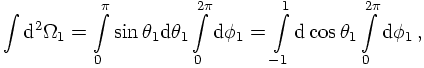

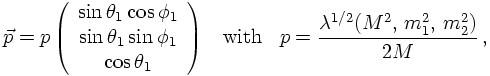

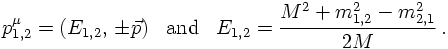

Consider first a simple two-body decay, filling a two-particle phase space. From the reasoning above, it should be clear that two angular variables suffice to describe this phase space element, namely cosθ and φ. The Jacobian for this is just a factor of 4π. However, let's try to be a bit more precise here. To do so, assume the decaying particle has rest mass M, i.e., P² = M², and the two decay products have masses m1 and m2, respectively. Then the two-body phase space integral is most conveniently written in the rest frame of P.
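Generating a point in this two-body phase space can be sketched as follows. The function names are hypothetical. The momentum of the decay products in the rest frame is fixed by the masses through the Källén function, and the direction is chosen uniformly in cosθ and φ. Since every direction is equally likely, all points carry the same weight; the overall normalisation depends on the phase space convention and is left out here.

```python
import math
import random

def kallen(a, b, c):
    # Källén (triangle) function lambda(a, b, c)
    return a * a + b * b + c * c - 2.0 * (a * b + a * c + b * c)

def two_body_decay(M, m1, m2, rng):
    """Return the four-momenta (E, px, py, pz) of both decay products
    in the rest frame of the decaying particle of mass M."""
    if M < m1 + m2:
        raise ValueError("decay is kinematically forbidden")
    # Magnitude of the three-momentum, fixed by the masses
    p = math.sqrt(max(kallen(M * M, m1 * m1, m2 * m2), 0.0)) / (2.0 * M)
    # Uniform direction: cos(theta) in [-1, 1], phi in [0, 2*pi)
    cos_theta = 2.0 * rng.random() - 1.0
    phi = 2.0 * math.pi * rng.random()
    sin_theta = math.sqrt(1.0 - cos_theta * cos_theta)
    px = p * sin_theta * math.cos(phi)
    py = p * sin_theta * math.sin(phi)
    pz = p * cos_theta
    e1 = math.sqrt(p * p + m1 * m1)
    e2 = math.sqrt(p * p + m2 * m2)
    # The two products are back to back in the rest frame
    return (e1, px, py, pz), (e2, -px, -py, -pz)

rng = random.Random(42)
p1, p2 = two_body_decay(10.0, 3.0, 4.0, rng)
print(p1)
print(p2)
```

Energy-momentum conservation and the mass-shell conditions hold by construction, which gives a convenient sanity check for any such generator.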

Propagators:

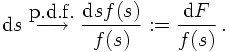

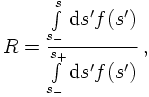

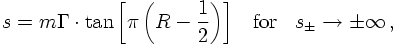

The next example is a bit more involved, and its full importance will become obvious later. For now, its significance can be motivated as follows. Assume that in some reaction an unstable particle is produced and decays further. Due to its finite lifetime, its rest energy (i.e., its mass) is not completely fixed but smeared out. Usually, the mass squared of such an unstable particle, s, is taken to be distributed according to a Breit-Wigner function,

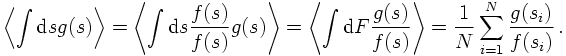

This looks very good: if the integral is over an ill-behaved function g(s), which diverges in the Monte-Carlo sense, this behaviour may be attenuated by introducing a p.d.f. f(s) with a similar divergence structure. Due to the ratio g(s)/f(s) in the sampling, the fluctuations then largely cancel, leading to a much smoother behaviour of the integrand. However, this has to be taken with a grain of salt: the integral over the p.d.f. must be performed analytically, and the resulting function must be invertible. Clearly, in most cases which deserve numerical integration, the "true" function g(s) is more complicated than the p.d.f.; otherwise there would be no need to integrate numerically.
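The effect of such a mapping can be sketched by integrating a peaked function once with uniform points and once with points distributed according to a Breit-Wigner p.d.f. Everything specific below is an assumption made for illustration: the unnormalised Breit-Wigner form, the values of `MASS` and `WIDTH`, the integration range, the toy integrand `g`, and all function names. The mapping uses the fact that the integral of this Breit-Wigner shape is an arctangent, which can be inverted with a tangent.

```python
import math
import random

MASS, WIDTH = 91.2, 2.5                 # illustrative values only
S_MIN, S_MAX = 60.0 ** 2, 120.0 ** 2    # illustrative integration range in s

def breit_wigner(s):
    # Assumed unnormalised Breit-Wigner shape in s
    return 1.0 / ((s - MASS ** 2) ** 2 + (MASS * WIDTH) ** 2)

def sample_bw(r):
    """Map uniform r in [0,1) to s distributed like breit_wigner on
    [S_MIN, S_MAX]; return (s, weight) with weight = 1 / p.d.f.(s)."""
    mg = MASS * WIDTH
    y_min = math.atan((S_MIN - MASS ** 2) / mg)
    y_max = math.atan((S_MAX - MASS ** 2) / mg)
    s = MASS ** 2 + mg * math.tan(y_min + r * (y_max - y_min))
    norm = (y_max - y_min) / mg  # analytic integral of breit_wigner
    return s, norm / breit_wigner(s)

def g(s):
    # Toy integrand (assumption): Breit-Wigner peak times a smooth factor
    return s * breit_wigner(s)

def integrate(n_points, mapped, seed=99):
    rng = random.Random(seed)
    sum_v = 0.0
    sum_v2 = 0.0
    for _ in range(n_points):
        r = rng.random()
        if mapped:
            s, w = sample_bw(r)
        else:
            s, w = S_MIN + r * (S_MAX - S_MIN), S_MAX - S_MIN
        v = g(s) * w  # ratio of integrand to p.d.f.
        sum_v += v
        sum_v2 += v * v
    mean = sum_v / n_points
    err = math.sqrt(max(sum_v2 / n_points - mean * mean, 0.0) / n_points)
    return mean, err

print("uniform:", integrate(20_000, mapped=False))
print("mapped: ", integrate(20_000, mapped=True))
```

Both runs estimate the same integral, but with the mapping the weighted integrand varies only as smoothly as the factor s, so the error estimate for the same number of points should come out markedly smaller.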

The impact of including suitable p.d.f.'s for some simple cases is exemplified here.