In contrast to the lectures before, this lecture will centre around technical rather than physical problems, namely the solution of equations of the type

for x, and the evaluation of integrals,

both potentially also in more than one dimension.

This lecture follows the discussion in Giordano & Nakanishi, appendices B, section 1, and E, sections 1, 2, and 4.

We first address the issue of finding the roots of the equation f(x)=0. We notice that this may help to find extremal values of an equation g(x), if dg(x)/dx = f(x), a typical optimisation problem. Let us first consider a case where we also know the first derivative of f, f'. In this case the method of choice is the Newton-Raphson method. It is an iterative method, based on truncating the Taylor series of f(x) after the first term. Let the unknown true root be X, which is estimated by xi. Defining Δxi = xi-X and Taylor expanding f(X) around xi, we have

leading to an improved estimate

This is a better estimate of X if Δxi is small enough. Although this approximation stems from a first-order truncation, the errors Δxi may decrease very rapidly if the function does not fluctuate to wildly. To see this, rewrite the equation above as

Re-expanding this in a Taylor series then yields the following result:

In other words: The size of the error decreases quadratically with each iteration step. Therefore, the order of convergence of this method is 2. If f(x) is sufficiently smooth or if the initial estimate, x0, is close enough to X, the convergence will be fast, but there are cases where this method does not converge at all.

How can this be used in cases where no information concerning the first derivative is available? The answer is to use the function itself to obtain an estimate for the derivative. Recalling that the derivative is defined through the limit

it is clear that it can be estimated through

Substituting this estimate into the expression for xi+1 yields

The error estimate is now given by

Interestingly, the order of convergence in this case equals the golden mean,

(1+√5)/2, roughly 1.6. This method, sometimes also known as the

secant method has all the shortcomings of the original Newton-Raphson

method, and in addition two initial guesses are necessary.

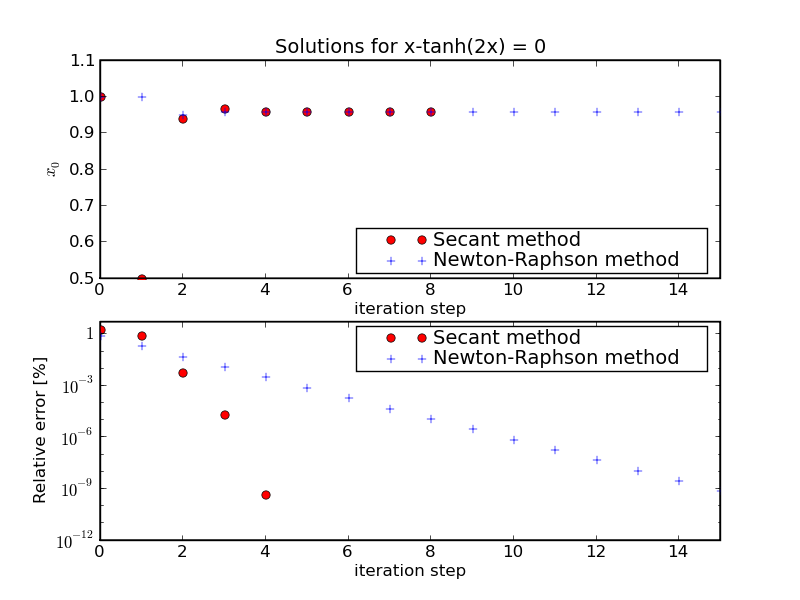

In order to see the differences of the two methods, let us exemplify them

by solving the equation

which we will meet again in lecture 8. Here, a is an essentially free parameter: For a≥1, this equation has two non-trivial solutions, at x=±X, while for a≤1 there is only one solution at x=0. Below we see the results for a = 2 obtained from both methods:

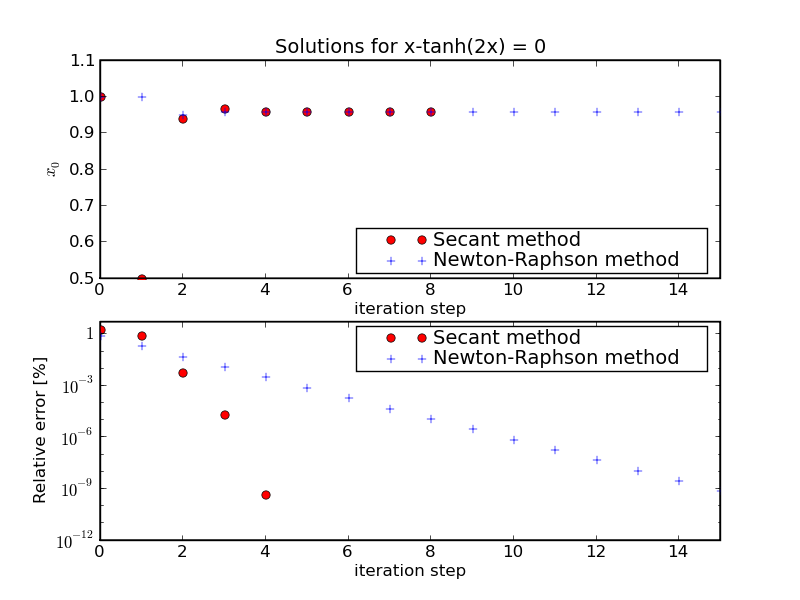

Playing with this code it becomes apparent that the level of convergence, and

the result depend on the initial values: Choosing, for instance, a different

initial value of x0= 0.5 for the Newton-Raphson method yields

the trivial solution x=0.

It is worth noting here that a condition to stop the recursion is given by