Cosmology I

M.Sc. in Particles, Strings & Cosmology

CPT, University of Durham

These are the notes for Cosmology I, the MSc course in Durham’s Centre for Theoretical Physics (CPT) Institute, a joint venture of the Physics and Mathematics departments of the University of Durham. They present an introduction to the smooth expanding universe and trace the history of the universe back in time. The basis of the current model of cosmology is laid out and the evidence that led up to this theory is reviewed. Problems and references are to be found throughout the text. Although we will review all concepts from fundamentals, prior knowledge of general relativity, thermodynamics and statistical mechanics will be useful. As for the mathematics, familiarity with differential equations and differential geometry will facilitate the derivation of results.

These notes are meant to be self-contained, yet for additional reference the following is the recommended bibliography:

- Modern Cosmology, Scott Dodelson [5]

- Cosmology notes at Amsterdam University and Nikhef

- An Introduction to Modern Cosmology, Andrew Liddle [6]

- Cosmology, Steven Weinberg [7]

Previous lecturer notes can be found here for reference.

Contents

- 1 The dawn of modern cosmology

- 2 Homogeneous & Isotropic

- 3 Geodesics, Horizons & Redshift

- 4 Einstein’s equations

- 5 The universe we live in

- 6 The universe in equilibrium

- 7 Turning back time

- 8 Boltzmann Equations

- 9 Nucleosynthesis

- 10 Thermal dark matter production

- 11 Inflation

- 12 Cosmology as a field theory

1 The dawn of modern cosmology

A rough picture with the main features of cosmology can be drawn directly from experimental evidence, with a little guidance, by a reader with virtually no knowledge of physics. This picture is an expanding universe where everything we see originated from a primordial hot plasma. This first lecture will provide this little guidance and help ease us into cosmology with barely an equation. At the same time this introduction will serve to establish the context for the more quantitative computations to follow.

Before laying out the evidence that led to modern cosmology it is pertinent to set the stage, in particular the size of the stage in cosmology. We have known for some time that our world is a rocky planet orbiting 150 million kilometres, or a few micro parsec ($1\,\mathrm{pc} \simeq 3.1\times 10^{13}$ km), around a mid-sized, midlife star some 8 kilo pc (kpc) away from the centre of a galaxy tens of kpc in diameter. The nearest large neighbouring galaxy, Andromeda, is several hundred kpc away and is the largest in our local group of 20 galaxies, placed in the outskirts of the Virgo supercluster, some 30 Mpc in size, this supercluster being one of the many known. The furthest objects we have seen are early stages of galaxies known as quasars, like 3C273, some Gpc away.


Cosmology concerns itself with the largest distances today, those above the Mpc, or about $3\times 10^{22}$ m. However much the previous paragraph has managed to convey, this scale is hard to grasp; for comparison, the exploration of the sub-atomic world has gone roughly (yet coincidentally) as many orders of magnitude below our human scale, with the LHC searching the $(14\,\mathrm{TeV})^{-1} \sim 10^{-20}$ m scale. It is nonetheless good to state the obvious: the ‘ruler’ in cosmology being this large, within its closest tick-marks fall many galaxies and intergalactic dust, all of whose internal structure and particulars we ignore to extract instead simple averages. For example we will be interested in how much matter there is in one box of 10 Mpc side regardless of whether this matter consists of stars, dust, planets or carbon-based life forms; this information is lost, just as details smaller than the pixel size are lost in a photo.

Distances between cosmological objects, however, were not always the same, as we learned as recently as the first half of the last century. Let us now turn to the first piece of evidence for the present theory of cosmology.

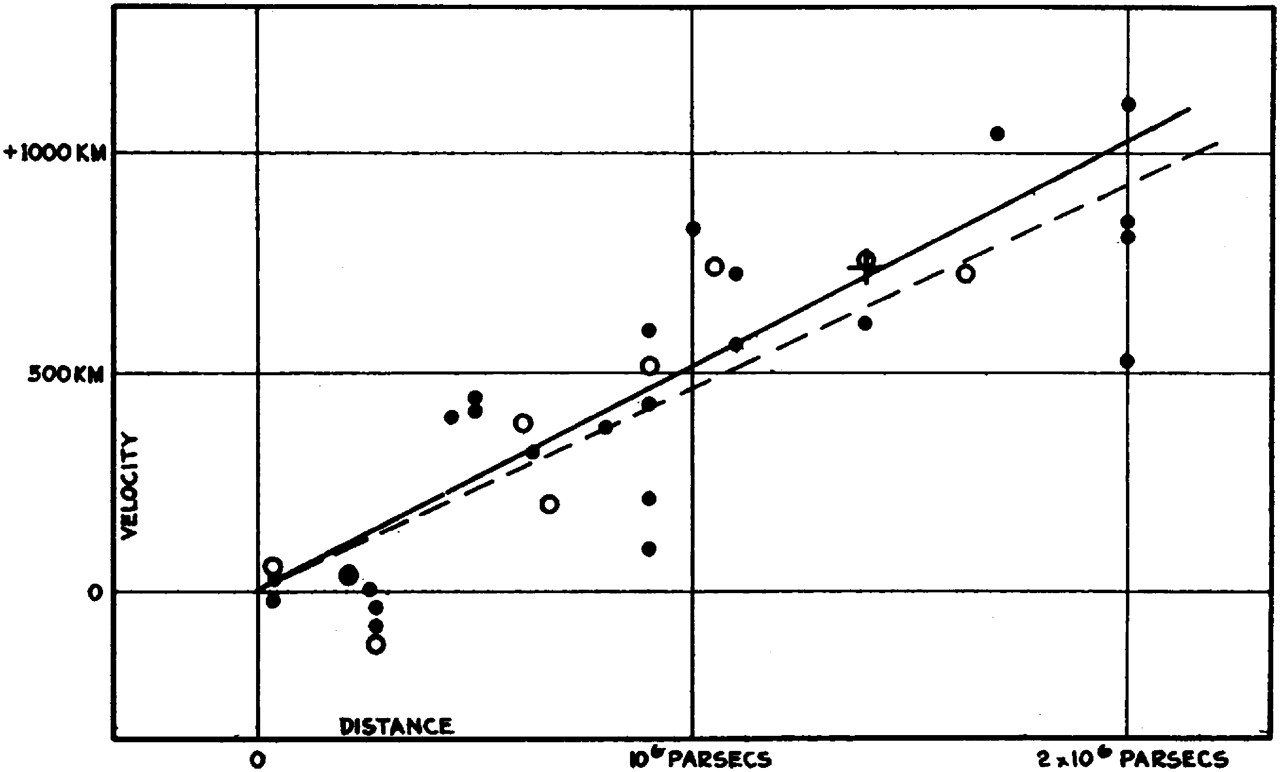

1.1 Hubble Diagram

In 1929 Edwin Hubble put out an article with the distances and relative velocities of a few dozen galaxies he and his assistant Milton Humason had measured, combined in a plot that was to make history (fig. 1(c)). These results were however much less simple to obtain than the previous statement would suggest, just as their interpretation within the present model of cosmology took decades to be established.


When Hubble started his research these objects were not even thought to be galaxies; part of the scientific community believed they were nebulae within the Milky Way, which gives an idea of how little was known about distances at the turn of the twentieth century. The confusion was there for several reasons; for one, the poor resolution of the instruments made them appear as cloudy patches in the sky. Indeed one can, on an exceptionally clear night far away from any artificial light, spot Andromeda with the naked eye, but it will not look much like a galaxy, just a hazy spot of faint light. This type of object had been catalogued in the eighteenth century, when in 1771 Charles Messier published Nebulae and Star Clusters. Its intent was basically to mark the place of these uninteresting objects so they would not get in the way of identifying the more relevant comets that were sought at the time. There are some hundred objects in this catalogue, which is still used as a reference today (Andromeda is Messier 31, or M31 for short), and as we now know, although they might look similar at low resolution, a third of them are galaxies while the rest are actual nebulae.

To find out the distances to these objects, Hubble used the 100 inch telescope at Mt Wilson Observatory in California to observe each of them for a prolonged period. In this way he was able to identify Cepheid stars within them. These are stars whose luminosity varies periodically, with a period known to be tightly correlated with the absolute luminosity (the power, or how much light the star produces per unit time). By measuring their apparent luminosity, that is how much light reaches us, one can work out how far away the star is by how diluted the power seems. In this way Hubble estimated that M31 (Andromeda) was 300 kpc away. The size of the Milky Way had only recently been determined, by the work of Harlow Shapley around 1920, to be considerably smaller, so this result showed that these formations were well outside the Milky Way.
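The dilution argument used for Cepheids can be made concrete with the inverse-square law. The sketch below is only an illustration and assumes Euclidean space with no absorption; the function name and the solar check values are not part of the notes.

```python
import math

def distance_from_flux(luminosity_w, flux_w_m2):
    """Distance (in metres) at which a source of the given luminosity
    produces the given flux, assuming the power is spread evenly over
    a Euclidean sphere of area 4*pi*d^2."""
    return math.sqrt(luminosity_w / (4.0 * math.pi * flux_w_m2))

# Sanity check with the Sun (illustrative values, not from the notes):
L_SUN = 3.828e26          # solar luminosity in W
SOLAR_CONSTANT = 1361.0   # solar flux at the Earth in W/m^2
AU = 1.495978707e11       # astronomical unit in m

d = distance_from_flux(L_SUN, SOLAR_CONSTANT)
print(d / AU)  # close to 1, i.e. one astronomical unit
```

For a Cepheid the luminosity would come from the measured period, and the flux from the apparent brightness at the telescope.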

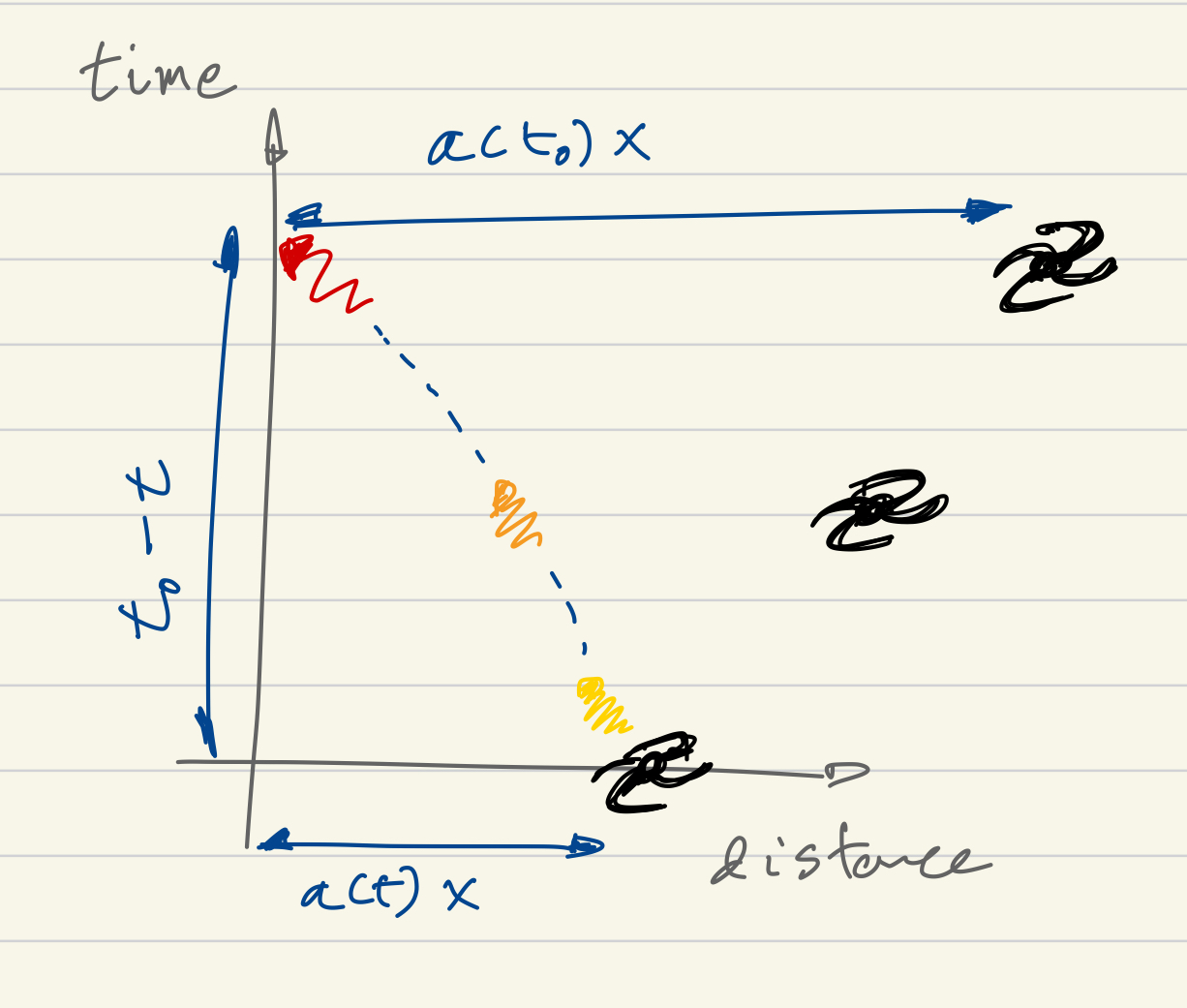

For the other axis of the plot in fig. 1(c) one needs the velocity relative to us. In order to find this out one can use the spectrum, instead of the intensity, of the light reaching us. Spectral emission lines from common elements can at times be computed from first principles, as with Hydrogen, or simply, and to more accuracy, measured here in the laboratory. Then, when looking at the spectrum of light from a galaxy, one can identify emission lines and hence deduce information about the elements making up its composition. For example we know that the transition between the two hyperfine-split 1S states of Hydrogen has a wavelength of 21 cm. These spectral lines however do not sit exactly on top of the ones we measure here on earth but are displaced up or down. This up or down is actually given a name: if the line appears at higher frequency (smaller wavelength) we say it is blue shifted, whereas if it is at lower frequency (longer wavelength) it is red shifted. As you might have guessed the naming comes from the visible spectrum of light and its ultraviolet and infrared limits.

The reason for this shift is the relative velocity to us, in a phenomenon known as the Doppler effect. This is the effect that makes approaching ambulances sound at a higher pitch than those going away. In astronomy Vesto Slipher had already made use of this effect to estimate velocities and Hubble employed the same methodology. The relation between the spectrum displacement and the relative velocity is given as

$$ z \equiv \frac{\lambda_{\rm obs}-\lambda_{\rm em}}{\lambda_{\rm em}} \simeq \frac{v}{c} \tag{1.1} $$

where $z$ is called the redshift, $\lambda_{\rm em}$ and $\lambda_{\rm obs}$ are the emitted and observed wavelengths, $v$ is the velocity of recession, and the last equality holds in the non-relativistic limit, where all the data that Hubble took falls. Finally with these two sets of data Hubble put together the plot of fig. 1(c). It is perhaps not a very convincing linear correlation, yet the present day version of the plot, fig. 1(d), should convince the sceptics.
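As a small numerical illustration of the redshift relation, the sketch below computes the redshift from a pair of wavelengths and compares the non-relativistic velocity with the one obtained by inverting the relativistic Doppler formula of Problem II. The function names and the sample wavelengths are assumptions made for the example, not data from the notes.

```python
C_KM_S = 299792.458  # speed of light in km/s

def redshift(lambda_obs, lambda_em):
    """Fractional shift of the observed wavelength relative to the emitted one."""
    return (lambda_obs - lambda_em) / lambda_em

def velocity_nonrel(z):
    """Non-relativistic Doppler estimate, v = c z."""
    return C_KM_S * z

def velocity_rel(z):
    """Velocity from inverting 1 + z = sqrt((1 + v/c) / (1 - v/c))."""
    r = (1.0 + z) ** 2
    return C_KM_S * (r - 1.0) / (r + 1.0)

# Hypothetical example: a line emitted at 656.28 nm and observed at 658.47 nm
z = redshift(658.47, 656.28)
print(z, velocity_nonrel(z), velocity_rel(z))
```

For shifts this small the two velocity estimates agree to better than a percent, which is the regime of Hubble's original data.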

The slope of the linear correlation is called the Hubble rate, $H_0$, with a 0 subindex to mark that it is measured today, again because it was not the same in the past. Numerically it is parametrized as

$$ H_0 = 100\,h\ \mathrm{km\,s^{-1}\,Mpc^{-1}} \tag{1.2} $$

where $h$ is a dimensionless number with measured values close to $h \simeq 0.7$ (the value used in these notes is taken from [2]); given that this value differs notably when taken from other sources it is common to leave $h$ unspecified (to avoid confusion with Planck's constant, here we will only use the reduced constant $\hbar$). This linear correlation, $v = H_0 d$, tells us that velocity is proportional to distance, i.e. that galaxies are receding from us, and faster the further away they are. We will elaborate on the simple consequences of this at the end of this lecture; for now let’s review the other main piece of evidence for modern cosmology.

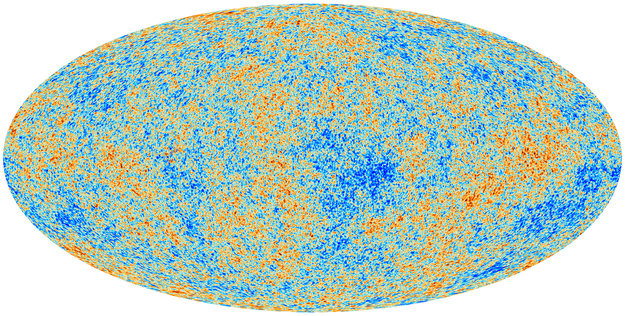

1.2 Cosmic Microwave Background

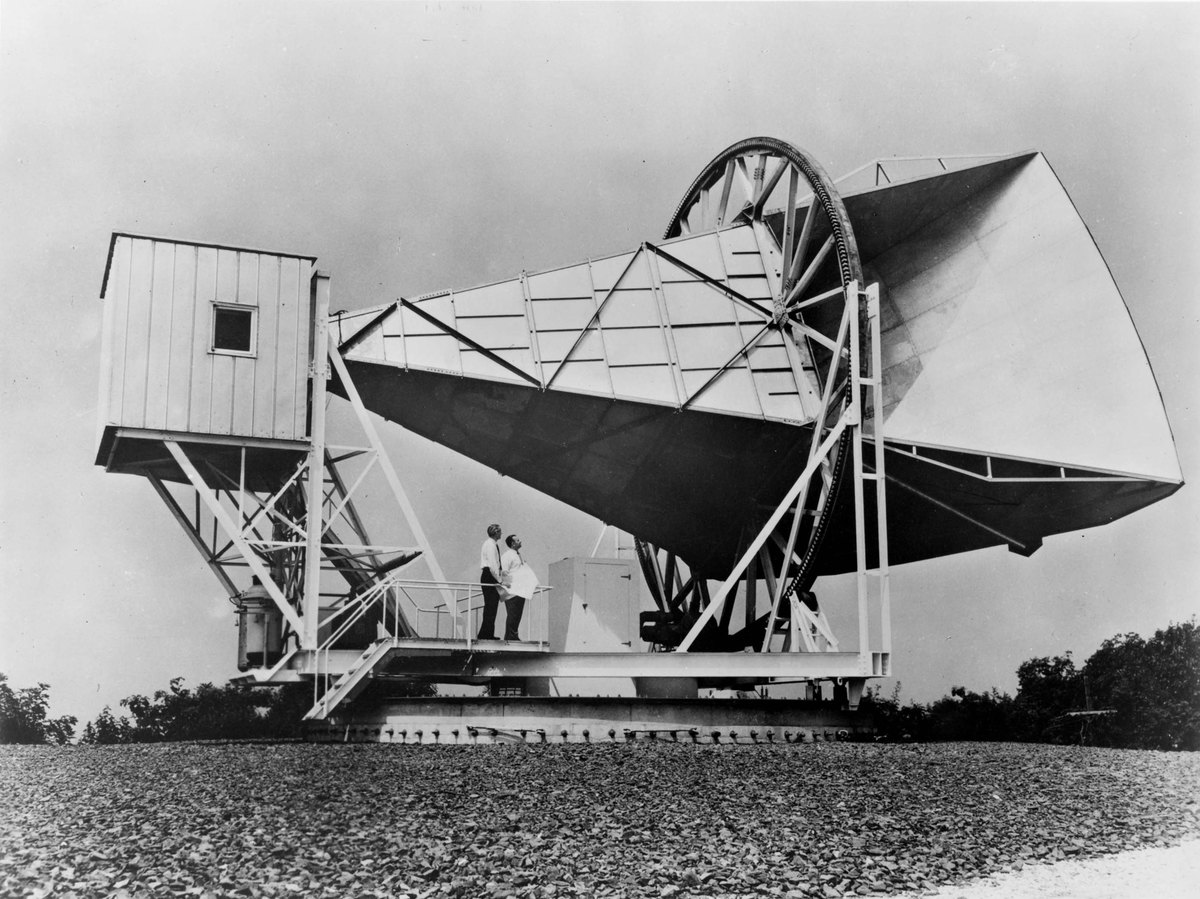

Back in the 1960s Arno Penzias and Robert Wilson were given access to a giant horn antenna designed to detect microwaves at Bell Telephone Laboratories. The original purpose of the antenna was to receive radiation from the Echo satellite in an early commercial test of satellite communications. The project was however done by 1964 and Penzias and Wilson were given control of the antenna to study cosmic microwave light. Microwave light has a wavelength from around millimetre to metre size, and Penzias and Wilson started looking at wavelengths around 7 cm. As in any experiment one of the first steps is to study systematic errors in the apparatus. There was a background noise getting in the way of their experiment which they tried to pin down for a year. They tested for this noise coming from the electronics of the equipment by cooling it, for radiation in the atmosphere by pointing the antenna in different directions, and even evacuated nesting pigeons and cleaned their mess. None of these seemed to account for this static, this noise, yet at least this revealed that the noise was isotropic, i.e. coming with equal intensity from all directions in the sky, and hence likely of extra-galactic origin.

Still they hesitated to put out their results up until a fateful phone call. In conversation with a colleague and when asked about how his work was going with the antenna Penzias replied that everything was well except for part of the results they did not understand. His colleague then recalled that he had been told by another colleague of a talk given by P. J. Peebles about some cosmic radiation coming from the early stages of the universe evolution and by now redshifted into the microwave range. The prediction actually predates the work of Peebles and his colleague Dicke and was first posed by Ralph Alpher and Robert Herman in 1948 although the former did not know about the latter.

Penzias and Wilson got in contact with Peebles and two papers were produced and sent to the Astrophysical Journal, one by Penzias and Wilson presenting the results (but cautious not to speculate on the cause of the noise other than a mention of a companion paper) and another by Peebles, Dicke, Roll, and Wilkinson interpreting the result in terms of the cosmological big bang theory. Today we call this isotropic cosmic noise the Cosmic Microwave Background (CMB), and it is one of the pillars of observational cosmology.


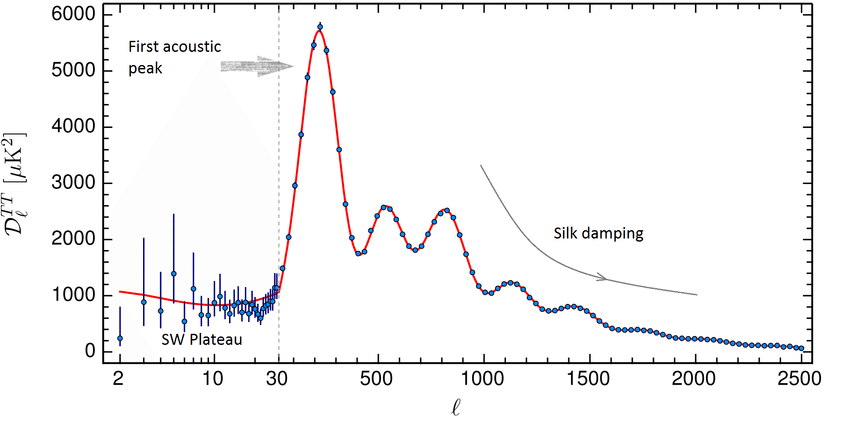

There is a wealth of data to be found in the CMB; for now what is relevant to underline is the shape of its spectrum. This can be seen in fig. 2(b), where experimental data is superimposed on the prediction. The prediction is that of black body radiation, the same shape that one would get on the inside of a hermetic furnace in equilibrium. The distinctive feature of this type of radiation is that the shape depends only on one parameter, the temperature $T$. In particular the expression for the intensity $I$ as a function of radiation frequency $\nu$ reads

$$ I(\nu) = \frac{4\pi\hbar\,\nu^3}{c^2}\,\frac{1}{e^{2\pi\hbar\nu/k_B T}-1} \tag{1.3} $$

where $k_B$ is Boltzmann's constant, $k_B = 8.617\times 10^{-5}$ eV/K with K degrees Kelvin, and $\hbar$ is Planck's reduced constant, $\hbar = 6.582\times 10^{-16}$ eV s. The fit is staggeringly accurate, as shown in fig. 2(b); it is in fact the most ideal black body radiation we have ever observed. The associated temperature that one can extract from this plot is

$$ T_0 \simeq 2.73\ \mathrm{K} \qquad \text{(CMB temperature today)} \tag{1.4} $$
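To get a feel for where the black body spectrum sits in frequency, one can evaluate the Planck law numerically. The sketch below is a hedged illustration: it writes the law with $h = 2\pi\hbar$, assumes a temperature of 2.725 K, and locates the maximum by a plain grid scan; the function names and grid limits are choices made for the example.

```python
import math

H_PLANCK = 6.62607015e-34  # Planck constant h = 2*pi*hbar, in J s
K_B = 1.380649e-23         # Boltzmann constant in J/K
C = 2.99792458e8           # speed of light in m/s

def planck_intensity(nu_hz, temp_k):
    """Black body intensity per unit frequency, 2 h nu^3 / c^2 / (exp(h nu / k T) - 1)."""
    x = H_PLANCK * nu_hz / (K_B * temp_k)
    return 2.0 * H_PLANCK * nu_hz ** 3 / C ** 2 / math.expm1(x)

def peak_frequency(temp_k, nu_min=1e9, nu_max=1e12, steps=20000):
    """Frequency (Hz) maximising the intensity, found by scanning a uniform grid."""
    best_nu, best_intensity = nu_min, 0.0
    for n in range(steps + 1):
        nu = nu_min + (nu_max - nu_min) * n / steps
        intensity = planck_intensity(nu, temp_k)
        if intensity > best_intensity:
            best_nu, best_intensity = nu, intensity
    return best_nu

print(peak_frequency(2.725) / 1e9)  # peak in GHz, in the microwave range
```

The peak lands at roughly 160 GHz, a wavelength of about 2 mm, which is why this radiation is observed in microwaves.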

1.3 The early universe

Let us finally put together these two experimental findings. First from Hubble’s discovery one has that if at cosmic distances everything is moving away from us, things used to be much closer and we can estimate when.

Take any galaxy at a distance $d$ from us. Naively extrapolating its velocity to be the same in time, if it is moving away at a velocity $v$ it used to be next to us a time $t$ ago, with

$$ t = \frac{d}{v} = \frac{1}{H_0} \tag{1.5} $$

where in the second equality we have just substituted in Hubble’s law, $v = H_0 d$. The distance, the one label that the galaxy we chose had, drops out of the equation; this means that this time is the same for any galaxy. Roughly all of the known cosmos used to be clumped together a time $H_0^{-1}$ ago! What is this time if we convert (1.2) into years? The current estimate for the age of the universe is 14 thousand million years; how does it compare with the naive estimate?
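The unit conversion behind this estimate can be sketched in a few lines. The value $h = 0.7$ used below is an assumption for illustration (the notes leave $h$ unspecified), and the function name is hypothetical.

```python
KM_PER_MPC = 3.0857e19        # kilometres in one megaparsec
SECONDS_PER_YEAR = 3.15576e7  # seconds in one Julian year

def hubble_time_years(h):
    """Naive age estimate 1/H0 in years, for H0 = 100 h km/s/Mpc."""
    h0_per_second = 100.0 * h / KM_PER_MPC  # H0 expressed in 1/s
    return 1.0 / h0_per_second / SECONDS_PER_YEAR

# Assumed value h = 0.7, for illustration only
print(hubble_time_years(0.7) / 1e9)  # in thousand million years
```

With this assumed $h$ the naive extrapolation gives a number of the same order as the quoted age of the universe.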

If one takes this conclusion further, when all the matter in the known universe was compressed together the energy per unit volume of the system must have been exceedingly large, just as a gas heats up when compressed. In fact if the universe had been hot enough, atoms would have ‘melted’, the electrons would be stripped away from the nucleus and for higher temperatures nuclei would have dissociated into neutrons and protons. Implicit in this discussion of a hot universe there is of course temperature.

Well, we have already talked about temperature, but in the context of the microwave radiation discovered by Penzias and Wilson. Although this talk of temperature was simply used to characterize the radiation, it proved to be a temperature that we can trace back to this hot and dense initial universe; whereas electrons and nucleons have undergone processing since the early universe to appear as intergalactic gas or galaxies themselves, the light from this early universe has travelled undisturbed to reach us today. Not only that, but the fact that the spectrum follows so closely the shape of a black body tells us that the early universe was in nearly perfect equilibrium.

These are the two basic concepts that thread through most of cosmology and whose balance accounts for many of the events in cosmological history: (i) the universe is expanding; (ii) in its early stage it used to be in thermal equilibrium with temperature $T$.

Problems

I Let’s do some unit conversion; from the next chapter on, we will use natural units, $\hbar = c = k_B = 1$, in our formulae to reduce the number of letters in them. However we will want to restore powers of these constants to express final quantities in e.g. cm, years or Kelvin. So, inserting powers of $\hbar$, $c$ and $k_B$, calculate:

| in years | in parsecs | in eV | (1.6) |

II Deduce Doppler’s shift for the relativistic case to find

$$ 1 + z = \sqrt{\frac{1 + v/c}{1 - v/c}} \tag{1.7} $$

[Hint: In the emitter frame the ‘crests’ of the wave are emitted every $\Delta t_{\rm em}$ seconds and so a distance $\lambda_{\rm em} = c\,\Delta t_{\rm em}$ (= wavelength) apart; convert this time interval to the observer frame to find the distance between crests she sees]. Looking at the data in fig. 2(a), is the non-relativistic approximation justified? On fig. 2(b), looking at the horizontal axis, is it still?

2 Homogeneous & Isotropic

The Hubble diagram did not break down galaxies by where in the sky they were located; whether the galaxy one is looking at is in the direction of Cassiopeia or in the vicinity of the Southern Cross, they all follow the same law for their velocity vs distance. Furthermore one would roughly find the same number of galaxies looking into a fixed-size solid angle in any part of the sky. This is to say that the universe seems isotropic, the same in all directions, at cosmological scales. It is also homogeneous on these scales.

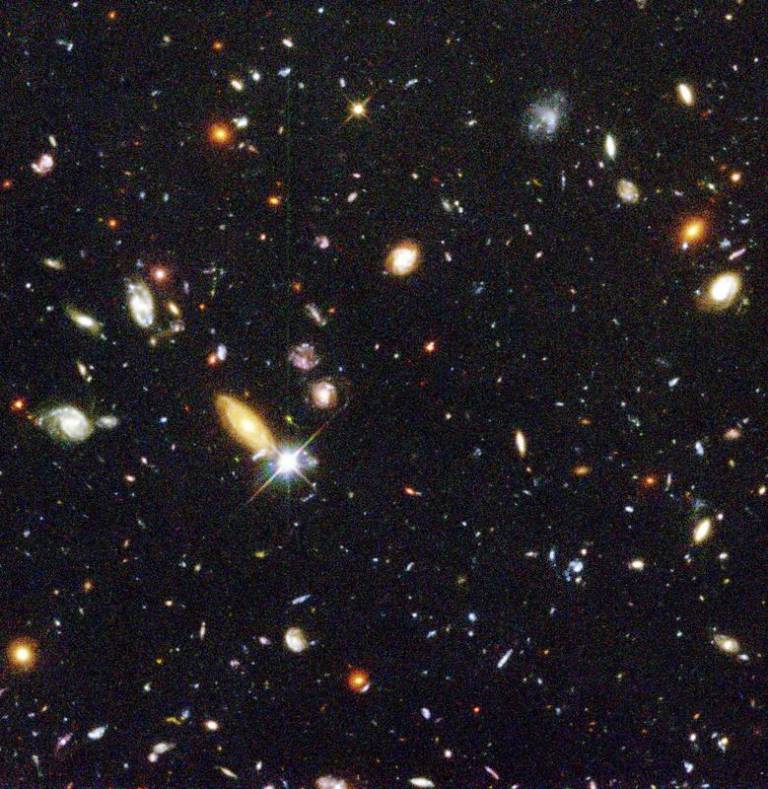

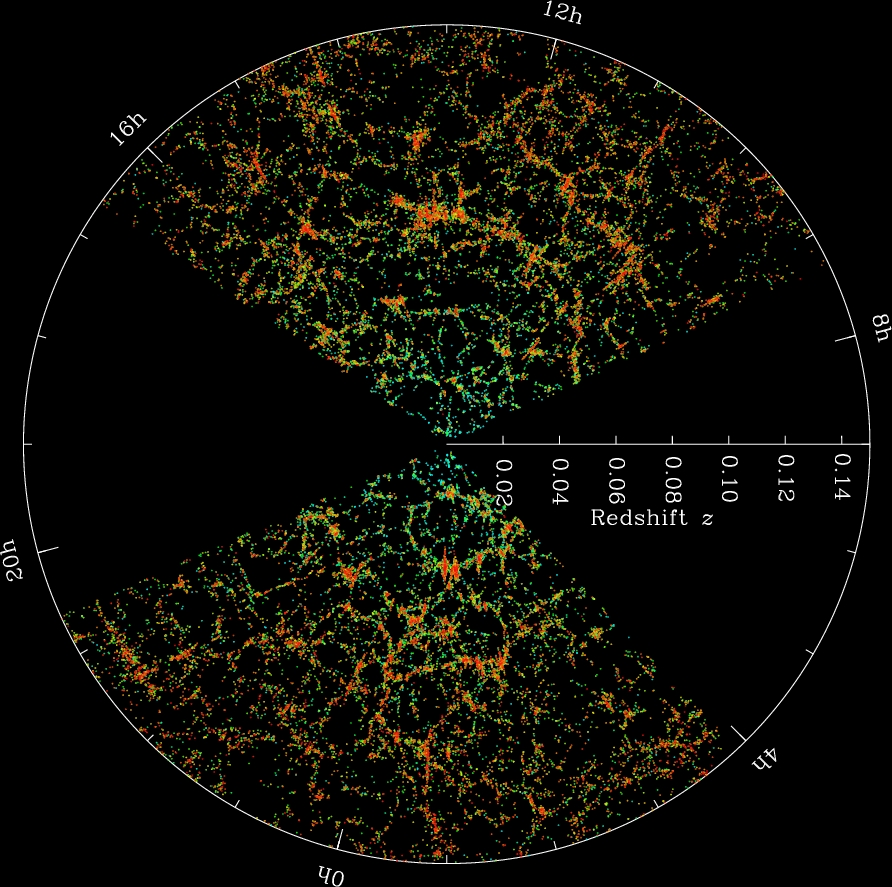

A theory argument to arrive at a homogeneous universe from an isotropic one is to assume our place in it is not special (a.k.a. the cosmological principle); if a universe looks the same in all directions from any point of reference it must be homogeneous too. One need not rely on theory however; measuring distances of galaxies from us and their angular coordinates in the sky we can create a map, which is what galaxy surveys do. The size of this ‘census’ is quite formidable; as an example the Sloan Digital Sky Survey has mapped nearly a million galaxies and figure 1(a) shows a 2d slice of the map that the experiment produced.

This homogeneity and isotropy seem counter-intuitive from the human or even astrophysical perspective; right where we are there seems to be a lot of matter around whereas if one goes a few tens of thousands of light years out there is comparatively nothing. The answer to this is two-fold. First one should remember what our ruler is in cosmology; consider water: it does seem homogeneous to us humans, yet we know that at a microscopic level it is not a continuum but is made of molecules and space between them. Similarly in cosmology there are as many galaxies in a box a few dozen megaparsec on a side around us as in the box next to it. Secondly what we ‘see’ is far from all there is; as the dynamics will reveal in two lectures’ time, the weight of galaxies is negligible, a small fraction of the total energy density. Even within visible matter, galaxies constitute only part of the whole, with the majority being intergalactic dust. The use we will find for galaxies is as probes into the cosmological expansion (tracers) with negligible effect (back-reaction) on the dynamics.

Before moving on to codify this homogeneity and isotropy mathematically, it is worth pondering for a minute the fact that the universe is so ‘simple’ at the largest scale when it needed not be so. It is indeed a clue to early-time dynamics, but we do not yet have the tools to explore this. Let us then for now embrace this happy circumstance that will simplify the mathematics for us.

2.1 The metric

The previous lecture gave the evidence for an expanding universe where, we have just learned, no point is special. Therefore the distance between any two given points, let us take them to be two distant galaxies, will grow with time. We describe this effect by introducing the scale factor $a(t)$ as

$$ \vec d(t) = a(t)\,\vec x \tag{2.1} $$

where $\vec d$ is the physical distance, with $d = |\vec d|$, and we have used $a(t)$ to ‘factor out’ time from a space-like variable $\vec x$. There is some ambiguity in this splitting of distance into $a$ and $\vec x$, as happens when one defines a physical quantity in terms of two constructs, so let us for practical purposes normalize. We take $\vec x$ to be physical distances as measured today ($t = t_0$)

$$ a(t_0) = 1 \tag{2.2} $$

where the zero index denotes present time, for reference $t_0 \simeq 14$ thousand million years. Lastly, to implement the rate of expansion as measured in the Hubble law, $v = \dot d = H_0\, d$ today, one has

$$ H_0 = \frac{\dot a(t_0)}{a(t_0)} = \dot a(t_0) \tag{2.3} $$

This gives $H_0$ in terms of the scale factor and presents us with the Hubble rate as the growth rate relative to the current size. In other words the inverse of $H_0$ gives the time scale that it takes for the universe to double in size at the present rate of growth. You can go back to eq. (1.2) for the value of Hubble today and check how much time this would take. The value of $H_0$ together with the convention eq. (2.2) gives the scale factor and its first derivative today; these are the final (rather than initial in this case) conditions that we will later evolve back in time with the dynamics of general relativity.

The exercise above has informally set a coordinate system, convenient to describe inertial observers caught in the expansion; a 4-dimensional trajectory in the coordinates $(t, \vec x)$ of one such observer has constant $\vec x$ (a fixed number of Mpc). One need then only specify the ‘label’ $\vec x$, which stays constant (and, remember, corresponds to physical distance today), whilst all such observers would agree on the time coordinate, defining a common ‘clock’ ticking at the same rate. This coordinate system can of course be used to describe any other trajectory, and indeed not all galaxies adjust exactly to the Hubble law; Andromeda is moving towards us so we would have $\dot{\vec x} \neq 0$. We call $\vec x$ the co-moving coordinate and we say Andromeda has a co-moving velocity. We will nonetheless neglect this type of effect in the following.

The system above is useful for its concreteness while capturing the experimental evidence, yet it is important to realize it is a conventional choice. One could multiply $a$ and $\vec x$ by inverse factors, $a \to \lambda a$ and $\vec x \to \vec x/\lambda$, and the physical distance would stay the same, or one could prefer a boosted frame or change to a different time variable. These would all be different ways to describe the same system, the same physics on which every observer should agree. Although this statement sounds just as sensible as it sounds vague, it does have a mathematical rewriting based on invariants. Invariants are observables whose measurement would yield the same value for every observer. The first instance of this we encounter is related to space-time distance, the 4-dimensional line element (we set the speed of light to 1, $c = 1$):

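$$ ds^2 = g_{\mu\nu}\,dx^\mu dx^\nu = -dt^2 + a^2(t)\,d\vec x^{\,2} = -dt^2 + a^2(t)\left[dr^2 + r^2\left(d\theta^2 + \sin^2\theta\, d\phi^2\right)\right] \tag{2.4} $$

with $dx^\mu$ being a differential, the infinitesimal version of $\Delta x^\mu$, and $g_{\mu\nu}$ is the metric, the link between our coordinate system and physical space-time distances. Already above one has a change of variables, from Cartesian to spherical coordinates; albeit a familiar one, it does serve to illustrate that what we call the metric is different in each frame

$$ x^\mu = (t, x, y, z): \qquad g_{\mu\nu} = \mathrm{diag}\left(-1,\ a^2,\ a^2,\ a^2\right) \tag{2.5} $$

$$ x^\mu = (t, r, \theta, \phi): \qquad g_{\mu\nu} = \mathrm{diag}\left(-1,\ a^2,\ a^2 r^2,\ a^2 r^2 \sin^2\theta\right) \tag{2.6} $$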

The connection with distances as we have discussed them initially can be obtained as the difference of two space-time points at equal time, $(t, \vec x_1)$ and $(t, \vec x_2)$.

Curvature

Thus far, for the sake of introducing one concept at a time, we have kept an implicit euclidean geometry in the 3 space coordinates.

This need not be the case and the differential geometry language of metrics allows for a simple implementation of deviations from the ‘flat’ case.

Indeed the metric could be a function of space and not only time. It cannot however be an arbitrary function of space given the requirement of homogeneity and isotropy; if space is not flat and has some curvature it must be the same everywhere and in every direction. One such geometric object is the sphere (although the one we can visualize is two dimensional), so to incorporate the effects of curvature into a metric let us first contemplate the case of positive curvature or spherical geometry. This is perhaps easiest if one goes to a Euclidean space with one extra dimension and defines our space as a hyper-surface. This means a metric and constraint as:

$$ d\ell^2 = d\vec x^{\,2} + dw^2, \qquad \vec x^{\,2} + w^2 = R^2 \tag{2.7} $$

where $R$ is the radius of the sphere and $w$ is an artificial extra dimension that we promptly dispose of by solving for $w$ as $w = \pm\sqrt{R^2 - \vec x^{\,2}}$ and substituting back in the metric to obtain

$$ d\ell^2 = \frac{dr^2}{1 - r^2/R^2} + r^2 d\Omega^2 \tag{2.8} $$

with $r = |\vec x|$ and $d\Omega^2 = d\theta^2 + \sin^2\theta\, d\phi^2$. One has therefore that distances in the angular ($\theta$, $\phi$) directions are as in flat space, yet the $r$ direction has an $r$-dependent factor. Despite the appearances the point $r = 0$ is in no way special and has the same curvature as any other.
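The elimination of the extra dimension can be spelled out in a few lines. The following is a sketch of the intermediate steps, writing the radius of the sphere as $R$, the extra coordinate as $w$ and $r = |\vec x|$ (symbols assumed here for illustration):

```latex
% Differentiate the constraint r^2 + w^2 = R^2:
r\,dr + w\,dw = 0
\quad\Longrightarrow\quad
dw^2 = \frac{r^2\,dr^2}{w^2} = \frac{r^2\,dr^2}{R^2 - r^2}
% Flat 3-space in spherical coordinates:
d\vec{x}^{\,2} = dr^2 + r^2 d\Omega^2
% Add the two pieces:
d\ell^2 = dr^2 + \frac{r^2\,dr^2}{R^2 - r^2} + r^2 d\Omega^2
        = \frac{dr^2}{1 - r^2/R^2} + r^2 d\Omega^2
```

The angular part is untouched because the constraint only involves $r$ and $w$, which is why only the radial direction picks up an $r$-dependent factor.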

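The same exercise can be repeated for negative curvature with a Minkowskian metric

$$ d\ell^2 = d\vec x^{\,2} - dw^2, \qquad \vec x^{\,2} - w^2 = -R^2 \tag{2.9} $$

to obtain instead

$$ d\ell^2 = \frac{dr^2}{1 + r^2/R^2} + r^2 d\Omega^2 \tag{2.10} $$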

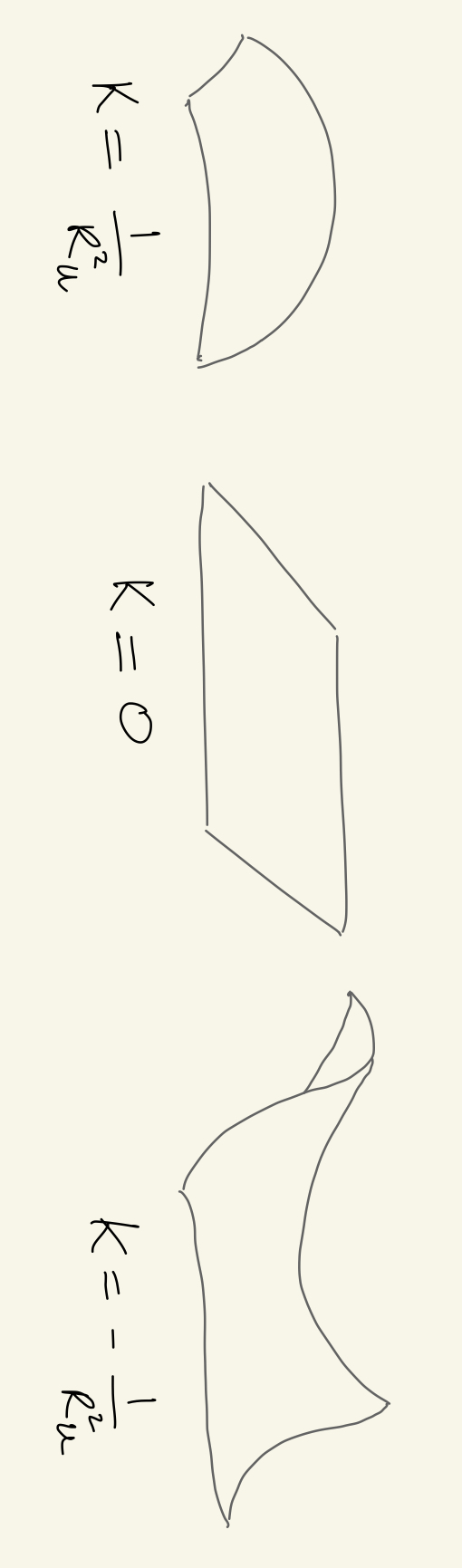

Although the math is straightforward and a natural extension of the positive curvature case, the non-positive metric makes this case harder to visualize. Standard 2-dimensional surfaces to convey the three possibilities for curvature are sketched in fig. 5.

The previous results can be synthesized in the line elements

$$ ds^2 = -dt^2 + a^2(t)\left[\frac{dr^2}{1 - k\,r^2/R^2} + r^2 d\Omega^2\right] \tag{2.11} $$

the three cases are referred to as positive curvature or closed universe ($k = +1$), flat universe ($k = 0$), and negative curvature or open universe ($k = -1$). In order to extend the co-moving distance concept to the curved case we perform a change of variables from $r$ to $\chi$ as

$$ ds^2 = -dt^2 + a^2(t)\left[d\chi^2 + r^2(\chi)\, d\Omega^2\right] \tag{2.12} $$

In this way co-moving distance and physical distance keep the linear relation, so (2.1) now reads $d = a(t)\,\chi$ along a radial line. Also note that the limit $R \to \infty$ returns a flat geometry as it should.

This metric, known as Friedmann-Robertson-Walker (FRW), is the most general one adhering to the homogeneity and isotropy assumptions and is described by one function of time and a constant for the curvature of 3-space. Simple as it might seem, it is worth spending some time familiarizing oneself with it, which is what we do next.

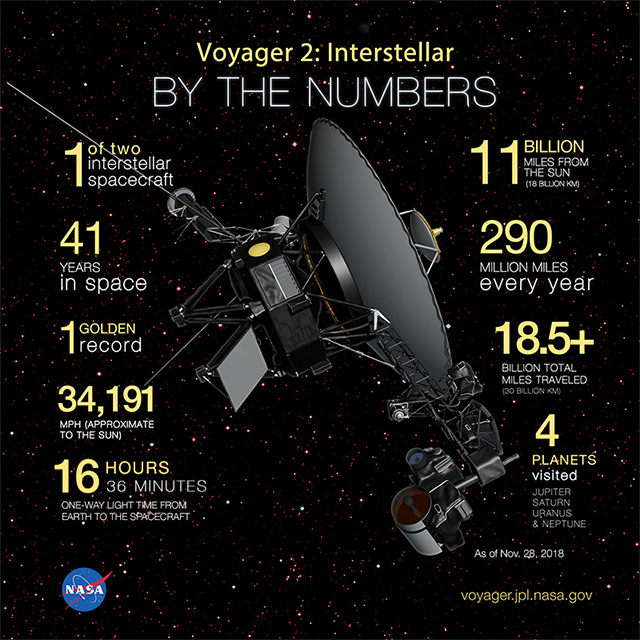

2.2 The path of light

How does one turn observations into data in our coordinate system? First note the rather obvious yet unavoidable fact that we are very much stuck in the same place for cosmological observations; even the furthest distances we have sent missions to are negligible in cosmology (the Voyager mission is of order $10^{-9}$ Mpc from us). We therefore rely on information reaching earth from far away. The information available at present comes overwhelmingly in the form of light, yet we have also ‘seen’ the cosmos through neutrinos and more recently gravitational waves. All these are the fastest possible means of transmission since photons and gravitons travel at the speed of light and neutrinos nearly so. Let us then examine how waves of massless particles, be it light or gravity waves, propagate in our coordinate system.

The statement of the speed of light being the same for all observers translates into the defining equation for the invariant line element of the trajectory of light:

$$ ds^2 = 0 \tag{2.13} $$

Let us for simplicity assume a radial ray of light ($d\Omega = 0$) in the coordinate system of eq. (2.12), with radial co-moving coordinate $\chi$, to write it explicitly as

$$ -dt^2 + a^2(t)\, d\chi^2 = 0 \tag{2.14} $$

With this much input and without solving for $a(t)$ one can already ask questions; the first one we pose is how far has light emitted at some time $t_e$ travelled to reach us at $t_0$? The answer is

$$ \int a(t)\, d\chi = c\int_{t_e}^{t_0} dt = c\,(t_0 - t_e) \tag{2.15} $$

where we momentarily restored the speed of light in our equation. The result is then that light travels a physical distance equal to the time interval in our frame (times the speed of light). If we ask instead what is the co-moving distance (equal to physical distance today) to the object that emitted that light we find

$$ \chi = \int_{t_e}^{t_0} \frac{dt}{a(t)} \tag{2.16} $$

which one cannot compute unless we are given the form of $a(t)$.

What we can conclude nonetheless, if the universe has been expanding ever since the emission time $t_e$, is that one distance is greater than the other, $\chi > t_0 - t_e$. This is simple to understand; light takes some time to travel, at the time when light was emitted the universe was smaller, and the emitter is farther today than it was at time $t_e$. This is also the case in Minkowski space-time for inertial observers moving away from each other. Nevertheless our case is not Minkowski and it should differ from it. This is relevant to realize because one could naively picture the expansion of the universe as an ‘explosion’ where fragments are moving away from each other but in an otherwise special relativity background. If this were true the distance light would have travelled is simply the distance of the object from us at the time of emission. This distance at time $t_e$ one can obtain from the co-moving distance by rescaling with $a(t_e)$. It then follows (given a monotonically increasing $a(t)$):

$$ a(t_e)\,\chi \;<\; \Delta t \;<\; \chi \tag{2.17} $$

with $\Delta t = t_0 - t_e$. That is, from the first inequality, (distance at the time of emission) < (distance travelled). Space itself has expanded in the intervening time!
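The ordering of these three distances can be checked numerically once a scale factor is chosen. The sketch below assumes, purely for illustration, the power law $a(t) = (t/t_0)^{2/3}$ with $t_0 = 1$ and $c = 1$; neither this form nor the function names come from the notes.

```python
def scale_factor(t, t0=1.0):
    """Assumed power-law scale factor, normalised so that a(t0) = 1."""
    return (t / t0) ** (2.0 / 3.0)

def comoving_distance(t_e, t0=1.0, steps=100000):
    """Integral of dt / a(t) from t_e to t0 using the midpoint rule (c = 1)."""
    dt = (t0 - t_e) / steps
    total = 0.0
    for n in range(steps):
        t_mid = t_e + (n + 0.5) * dt
        total += dt / scale_factor(t_mid, t0)
    return total

def light_distances(t_e, t0=1.0):
    """Return (distance at emission, distance travelled, co-moving distance)."""
    chi = comoving_distance(t_e, t0)
    travelled = t0 - t_e
    at_emission = scale_factor(t_e, t0) * chi
    return at_emission, travelled, chi

at_emission, travelled, chi = light_distances(0.125)
print(at_emission, travelled, chi)
```

For this choice the integral can also be done by hand, $\chi = 3 t_0 [1 - (t_e/t_0)^{1/3}]$, which offers a cross-check of the numerical result.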

2.3 Measuring distance

As outlined in the previous lecture there are a number of methods for determining distances in cosmology; our coordinate system allows us to put together all the different measurements into coordinates and compare them. We next outline two methods of distance determination and what co-moving coordinates they correspond to.

Luminosity distance. Some astrophysical objects have a known luminosity $L$ (energy emitted per unit time in the form of light), which one can compare with the flux $F$, that is how much energy in radiation reaches our detector per unit area and unit time.

The energy emitted will dilute into a shell around the emission point of radius, today, $\chi$. The area of this shell depends on the geometry; a positive curvature geometry will have, for given $\chi$, an area smaller than the flat case $4\pi\chi^2$. Exercises for circles instead of spheres with a pen, an orange and peeling will convince the dubious. Conversely the negatively curved case has a larger area than $4\pi\chi^2$. The area in the curved case can be computed realizing that $\chi/R$ acts as the polar angle in our 3d sphere and a flat slice through our constant-$\chi$ surface has radius $R\sin(\chi/R)$ in the $\vec x$ hyper-plane. The three possibilities read:

$$ A(\chi) = 4\pi S_k^2(\chi), \qquad S_k(\chi) = \begin{cases} R\sin(\chi/R) & k = +1 \\ \chi & k = 0 \\ R\sinh(\chi/R) & k = -1 \end{cases} \tag{2.18} $$

In addition to this effect one has that the rate at which one receives photons is dilated with respect to the rate of emission, whereas the energy of an individual photon, proportional to the frequency, also diminishes with the expansion of the universe. This last point we will demonstrate explicitly in the next chapter; intuitively it is a consequence of the stretching of space and the energy of a photon being inversely proportional to the wavelength, $E = 2\pi\hbar c/\lambda$. Each of the two effects contributes a factor $a(t_e)$, with $t_e$ the emission time. All in all the flux we observe on earth is related to the luminosity as

$$ F = \frac{L\, a^2(t_e)}{4\pi S_k^2(\chi)} \equiv \frac{L}{4\pi d_L^2} \tag{2.19} $$

where we have defined the luminosity distance $d_L = S_k(\chi)/a(t_e)$, as is conventional.

This equation can be inverted to find $\chi$.

One has then that the same star seems dimmer in an open universe and brighter in a closed one.

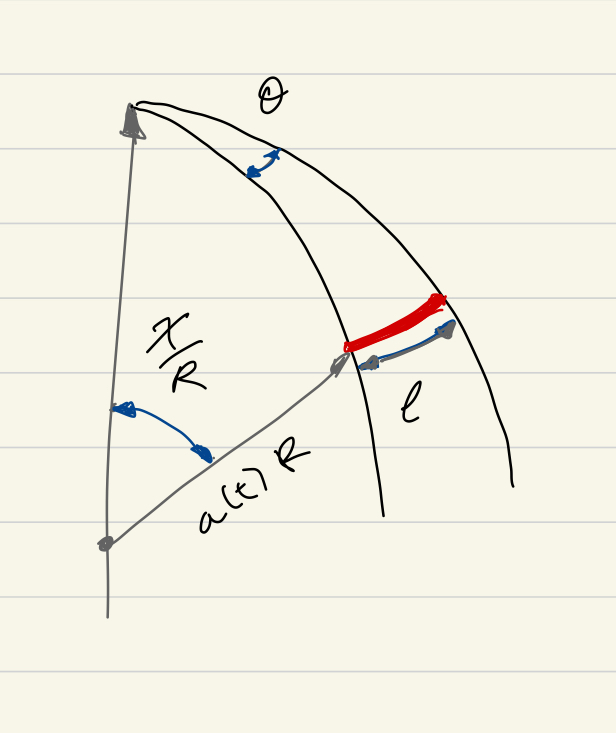

Angular distance. Another possibility is looking at objects whose dimensions are known, together with trigonometry. Note that angles do not change in time in a homogeneously and isotropically expanding universe. For simplicity consider a unidimensional rod of length $\ell$ laid perpendicular to the line of sight at a distance much greater than its size, so that the angle it subtends is $\theta \ll 1$ in radians.

Tracing time back to when the light reaching us was emitted, $t_e$, the object was at a distance $a(t_e)\,\chi$ (co-moving distance scaled back). The relation to the length is then given by trigonometry in curved space. For the flat case a simple sketch leads to the relation $\ell = a(t_e)\,\chi\,\theta$. The positive curvature case takes a bit more sketching, although the derivation for luminosity distance above offers clues. The co-moving coordinate $\chi$ over $R$ gives the polar angle, whose sine gives the distance to the axis at $\chi = 0$. This is sketched in fig. 7 and leads to the relation $\ell = a(t_e)\,R\sin(\chi/R)\,\theta$. This generalizes to the expression

$$ \ell = a(t_e)\, S_k(\chi)\, \theta \equiv d_A\, \theta \tag{2.20} $$

with $S_k(\chi) = R\sin(\chi/R),\ \chi,\ R\sinh(\chi/R)$ for $k = +1, 0, -1$ respectively. One has then that the same object at the same distance $\chi$ from us subtends an angle

$$ \theta = \frac{\ell}{a(t_e)\, S_k(\chi)} = \frac{\ell}{a(t_e)} \times \begin{cases} \left[R\sin(\chi/R)\right]^{-1} & k = +1 \\ \chi^{-1} & k = 0 \\ \left[R\sinh(\chi/R)\right]^{-1} & k = -1 \end{cases} \tag{2.21} $$

That means, since $\sin x < x < \sinh x$ for $x > 0$, objects seem larger (larger $\theta$) in a positive curvature space and smaller in a negative curvature one. To get a sense of why this is the case intuitively you can think of a sphere with the observer at the north pole and the object south of the equator.

If one knows both the luminosity and dimensions of an object, extracting $\chi$ from both methods above provides a useful consistency check. Let us collect here both definitions

$$ d_L = \frac{S_k(\chi)}{a(t_e)}, \qquad d_A = a(t_e)\, S_k(\chi) \tag{2.22} $$

where $\chi$ itself can be written as a function of the emission time $t_e$ as in eq. (2.16).
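The two distance measures can be compared numerically. The sketch below assumes the curvature is encoded by a sign `k` and a curvature radius `radius` (names chosen for this example), and uses the fact that both distances share the same geometric factor, so that their ratio only depends on the scale factor at emission.

```python
import math

def s_k(chi, k, radius):
    """Geometric factor: R sin(chi/R), chi or R sinh(chi/R) for k = +1, 0, -1."""
    if k == 1:
        return radius * math.sin(chi / radius)
    if k == 0:
        return chi
    if k == -1:
        return radius * math.sinh(chi / radius)
    raise ValueError("k must be +1, 0 or -1")

def luminosity_distance(chi, a_e, k=0, radius=1.0):
    """d_L = S_k(chi) / a(t_e): the source looks farther away (dimmer)."""
    return s_k(chi, k, radius) / a_e

def angular_distance(chi, a_e, k=0, radius=1.0):
    """d_A = a(t_e) S_k(chi): the physical size divided by the angle subtended."""
    return a_e * s_k(chi, k, radius)

# Illustrative numbers in units of the curvature radius (not from the notes)
chi, a_e = 0.5, 0.25
for k in (1, 0, -1):
    print(k, luminosity_distance(chi, a_e, k), angular_distance(chi, a_e, k))
```

Whatever the geometry, the ratio $d_L/d_A$ equals $1/a^2(t_e)$ in this sketch, which is the consistency check mentioned in the text.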

Problems

I The change of variables from equation (2.11) to (2.12) satisfies, by comparing the two equations,

$$ d\chi = \frac{dr}{\sqrt{1 - k\,r^2/R^2}} \tag{2.23} $$

Solve this relation with for the three values of . How is the expression you found related to ?


II Given the scale factor compute the physical distance light travels from to (2.15), the co-moving distance (2.16) and distance at emission . Is relation 2.2 satisfied? You can check by taking for instance.

III Use the two voyager missions and the earth for a determination of the universe geometry via trigonometry. Assume the two missions are the same distance away from us today using data on the figure (careful this is NASA so billion=), the line-of-sight of the two form a relative angle as seen from earth whereas on board the angle between earth and the other spacecraft is , and take a spherical geometry for your computations. Determine in terms of to be:

| (2.24) |

Expand on and assuming we measure angles with degree accuracy, find a bound on .

3 Geodesics, Horizons & Redshift

The previous lecture laid out the metric used in cosmology and connected it to observations. In particular the scale factor $a(t)$ measuring the growth of the universe has a first derivative related to the Hubble rate as $H_0 = \dot a(t_0)/a(t_0)$ today, and we set $a(t_0) = 1$ so that co-moving distance is present-time physical distance. An additional parameter of the metric accounted for a possible curvature of space, $k/R^2$, whose current experimental value is compatible with 0 but which we keep general for now.

3.1 Geodesics

Although no reference to gravity has been made so far, the metric incorporates all its effects within the homogeneous and isotropic approximation we are taking here. This will be made explicit in the next lecture when the equations of general relativity show how matter sources the dynamics of the metric. For now this section studies the motion of test particles or probes in this background metric.

Since gravity is the single relevant interaction in the cosmological regime today, the metric must suffice to determine the dynamics. Let us consider a point particle of mass $m$ travelling in our FRW universe. As we did with light, we describe its four-dimensional path with one parameter such that the first derivative is the 4-momentum:

| (3.1) |

The path is then given by solving the equation of motion yet no force of gravity is in sight. This is so because in the geometric language of general relativity the gravitational force is in the connection or first derivative of the metric and the equation of motion is the geodesic equation or path of minimum space-time distance. Since this formulation might be unfamiliar to the reader, here first we take a detour to introduce geodesics as describing dynamics.


To ease us into geodesic equations and the connection let us resort momentarily to flat space and the simple equation for inertial motion, i.e. in the absence of any force:

| $\delta_{ij}\,\dfrac{d^2x^j}{dt^2}=0$ | (3.2) |

where $\delta_{ij}$ is the Kronecker delta. If one were to describe the system in another set of coordinates $y^a$,

| $x^i=x^i(y)\,,\qquad \dfrac{dx^i}{dt}=\dfrac{\partial x^i}{\partial y^a}\dfrac{dy^a}{dt}$ | (3.3) |

and rewrite the equations of motion (EoM) in terms of the metric $g_{ab}=\delta_{ij}\,\dfrac{\partial x^i}{\partial y^a}\dfrac{\partial x^j}{\partial y^b}$, which can be done by multiplying by $\partial x^i/\partial y^a$, summing on $i$ and a little trickery,

| $g_{ab}\left(\dfrac{d^2y^b}{dt^2}+\Gamma^b_{cd}\,\dfrac{dy^c}{dt}\dfrac{dy^d}{dt}\right)=0$ | (3.4) |

we obtain a second derivative term as in the original case plus a term quadratic in first derivatives with a coefficient $\Gamma^b_{cd}$ which we call the connection or Christoffel symbols. This fictitious force has appeared in the process of changing to another set of coordinates or observer frame. The case of gravity itself is not unlike this: one can always find a local frame in which there are no forces (the connection vanishes), a local free-falling frame. Nevertheless even such an observer would be able to tell the presence of gravity by the non-vanishing second derivative of the metric through tidal forces.

The dynamics then will be given by the connection or first derivatives of the metric which in our case reads

| (3.5) |

where we have defined for convenience the internal space metric Diag. The definition of the connection one derives from eq. (3.4) is

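| $\Gamma^\mu_{\alpha\beta}=\dfrac{1}{2}\,g^{\mu\nu}\left(\partial_\alpha g_{\nu\beta}+\partial_\beta g_{\nu\alpha}-\partial_\nu g_{\alpha\beta}\right)$ | (3.6) |

where the upper-index metric is the inverse, as $g^{\mu\nu}g_{\nu\rho}=\delta^\mu_\rho$.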
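As a cross-check of the definition of the connection in eq. (3.6), the following minimal sketch evaluates it numerically with finite differences. It is only an illustration under stated assumptions: the metric is taken to be diagonal, the spatial part is flat and written in Cartesian coordinates with signature (+,-,-,-), and the scale factor $a(t)=t^{2/3}$ is an arbitrary choice. The function names (`christoffel`, `flat_frw`, `scale_factor`) are ours and not part of the notes.

```python
def christoffel(metric, x, h=1e-5):
    """Connection Gamma[mu][alpha][beta] of a DIAGONAL metric at the point x.

    Implements Gamma^mu_{ab} = 1/2 g^{mu mu} (d_a g_{mu b} + d_b g_{mu a} - d_mu g_{ab})
    with central finite differences for the first derivatives of the metric.
    """
    dim = len(x)

    def d_metric(direction):
        # Partial derivative of every metric component along one coordinate.
        up = list(x)
        dn = list(x)
        up[direction] += h
        dn[direction] -= h
        g_up, g_dn = metric(up), metric(dn)
        return [[(g_up[i][j] - g_dn[i][j]) / (2 * h) for j in range(dim)]
                for i in range(dim)]

    dg = [d_metric(k) for k in range(dim)]      # dg[k][i][j] = d_k g_ij
    g = metric(x)
    gamma = [[[0.0] * dim for _ in range(dim)] for _ in range(dim)]
    for mu in range(dim):
        inv = 1.0 / g[mu][mu]                   # inverse of a diagonal metric
        for al in range(dim):
            for be in range(dim):
                gamma[mu][al][be] = 0.5 * inv * (
                    dg[al][mu][be] + dg[be][mu][al] - dg[mu][al][be])
    return gamma


def scale_factor(t):
    return t ** (2.0 / 3.0)                     # illustrative choice of a(t)


def flat_frw(x):
    # x = (t, x1, x2, x3); flat space, Cartesian coordinates (assumption).
    a2 = scale_factor(x[0]) ** 2
    return [[1.0, 0.0, 0.0, 0.0],
            [0.0, -a2, 0.0, 0.0],
            [0.0, 0.0, -a2, 0.0],
            [0.0, 0.0, 0.0, -a2]]


gamma = christoffel(flat_frw, [1.0, 0.3, -0.2, 0.5])
a, a_dot = 1.0, 2.0 / 3.0                       # a and da/dt at t = 1
print(gamma[0][1][1], a * a_dot)                # time-space-space component
print(gamma[1][0][1], a_dot / a)                # space-time-space component
```

In this example the only non-vanishing elements are those with one time index and two equal space indices, with values $a\dot a$ and $\dot a/a$, which can be compared with the result of doing the differentiation by hand.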

A difference with the derivation above is that time itself is a coordinate and the geodesic equation has 4 components while the first derivative (momentum) is constrained to be on shell as in eq. (3.1). The system to be solved is then

| (3.7) | ||||

| (3.8) |

where we have introduced the covariant derivative and have used the chain rule . Introducing the covariant derivative does more than provide a compact way of writing the geodesic equation: it is the derivative which yields covariantly transforming objects (i.e. tensors), which one in turn needs to build the theory of gravity as the local theory of coordinate transformations. The similarity with gauge theories (as is the case of the Standard Model), which also present a covariant derivative, is in fact indicative of a more profound connection between the two, yet this falls outside of our scope.

Relevant for our discussion is the fact that conservation laws which one may be used to writing with ordinary derivatives must, in the presence of gravity, be promoted to covariant ones, for example:

| Special Relativity | General Relativity | (3.9) |

| (3.10) | |||||||

| (3.11) |

where we see that the covariant derivative acts on every index and with a minus relative sign on lower indices:

| (3.12) |

Back to cosmology: with our FRW metric, differentiation and a little patience yield a connection which can be summarised as

| (3.13) |

with every other element being 0. One can be more explicit and give the space-like components

| (3.14) | ||||||||

| (3.15) |

where we note that the partial derivatives are actually total derivatives given that the functions they act on depend only on one variable. So that finally we can write the differential equation for our trajectory in (3.1),

| (3.16) |

explicitly by substituting in . Let’s do it for the 0’th component and recalling the notation one finds

| (3.17) |

which can be turned into an equation for the energy alone by using the on-shell condition and the definition of momentum as

| (3.18) |

and substitution back in eq. (3.17)

| (3.19) |

The ultra-relativistic ($E\gg m$) and non-relativistic ($E\simeq m$) limits of this differential equation are simple to solve

| $E\gg m:\qquad E(t)\propto\dfrac{1}{a(t)}$ | (3.20) |

| $E\simeq m:\qquad E(t)\simeq m$ | (3.21) |

Hence we have that the energy of massless particles (e.g. light) scales inversely with the expansion of the universe while the energy of slowly moving massive particles stays the same. This result one might derive with considerably less effort just by noting that the energy of a photon is:

| $E=h\,\nu=\dfrac{h\,c}{\lambda}$ | (3.22) |

with $\nu$ and $\lambda$ the frequency and wavelength respectively. If, as stated in the previous lecture, space itself is expanding, wavelengths expand with it as well and this greater wavelength means smaller energy. On the other hand a point particle with mass $m$ will have a first order contribution to the energy $E\simeq m$, independent of $a$.
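The two limits above can be checked numerically. The sketch below assumes that the 0th component of the geodesic equation, combined with the on-shell condition, takes the form $E\,dE/dt=-(\dot a/a)(E^2-m^2)$, which is what the FRW connection gives for the metric signature used in these notes; written in terms of the scale factor it reads $dE/da=-(E^2-m^2)/(aE)$. The function names are hypothetical and chosen for this illustration only.

```python
import math


def energy_closed_form(a, a0, e0, m):
    """E(a) from the conserved combination a^2 (E^2 - m^2)."""
    return math.sqrt(m ** 2 + (e0 ** 2 - m ** 2) * (a0 / a) ** 2)


def energy_numeric(a, a0, e0, m, steps=20000):
    """Integrate dE/da = -(E^2 - m^2) / (a E) with a midpoint (RK2) scheme."""
    def rhs(a_, e_):
        return -(e_ ** 2 - m ** 2) / (a_ * e_)

    e, ai = e0, a0
    da = (a - a0) / steps
    for _ in range(steps):
        k1 = rhs(ai, e)
        k2 = rhs(ai + 0.5 * da, e + 0.5 * da * k1)
        e += da * k2
        ai += da
    return e


# Massless particle: the energy drops by the factor the universe has grown.
print(energy_numeric(10.0, 1.0, 1.0, 0.0))
# Slow massive particle (units where m = 1): the energy stays close to m.
print(energy_numeric(10.0, 1.0, 1.001, 1.0))
```

The closed form makes the physics explicit: it is the physical momentum squared, $E^2-m^2$, that redshifts as $1/a^2$, and the two limits follow from whether it dominates over $m^2$ or not.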

3.2 Redshift & Horizons

The fact that the evolution of the energy in light tracks the (inverse of the) expansion of the universe allows for a simple conversion of experimental observations into dating. The first lecture outlined how the shift in the frequency of light described as the Doppler effect can be used to determine relative velocity. We can now relate this shift to the scale factor, recalling the definition in eq. (1.1), which now reads:

| $1+z=\dfrac{\lambda_{\rm obs}}{\lambda_{\rm em}}=\dfrac{a(t_0)}{a(t_{\rm em})}=\dfrac{1}{a(t_{\rm em})}$ | (3.23) |

Some of the furthest away objects we have seen are quasars at $z\simeq 6$, and so bring information from back when the universe was a seventh of its current size. We can even see further back to higher redshifts, only then the universe did not look anything like now; for one, galaxies had not formed!

This brings us to the daring question: how far would we have to look to see the ‘birth’ of the known universe, if such a thing were possible? Recall that we obtained in the first lecture an estimate of the age of the universe by approximating when all the galaxies and everything else we see was ‘in the same place’, which is what we mean by birth. This corresponds to $a\to 0$ and one would expect this limit to be singular or ill defined, yet the question we posed has a finite answer; in comoving distance it is:

| $\eta_0=\displaystyle\int_0^{t_0}\frac{dt}{a(t)}$ | (3.24) |

where even though $1/a\to\infty$ as $t\to 0$, it does so in general mildly enough so that the integral converges. This distance is called the co-moving horizon today since we believe we have no way of knowing about anything beyond this radius.

However our initial scepticism about this limit is justified: it is a convenient abstraction, yet we should not take it literally; the physical laws that we know can only be taken back in time so far, until the conditions of the universe are such that they break down. In the integral above, however, this regime makes a tiny contribution to the total, which is dominated by late times, and for all practical purposes we can take the limit $t\to 0$.

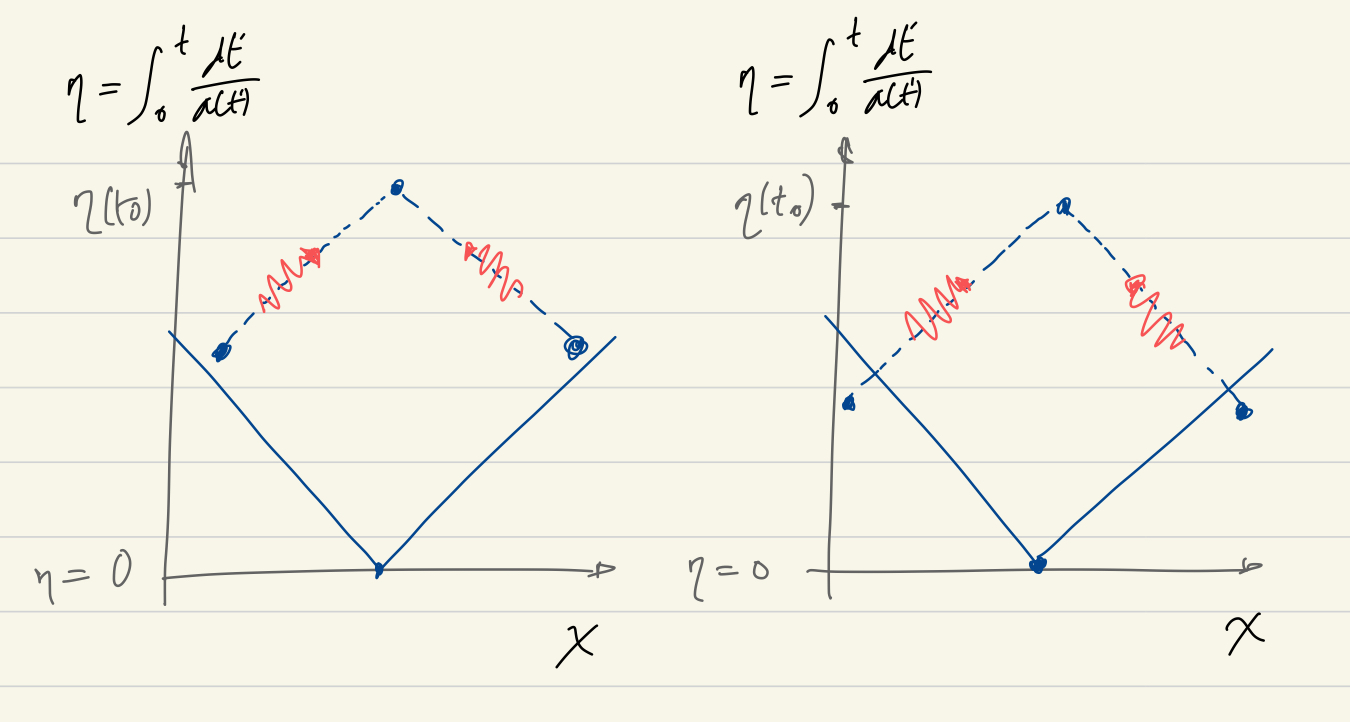

One can make the answer to this question time dependent to introduce what is known as conformal time:

| $\eta(t)=\displaystyle\int_0^{t}\frac{dt'}{a(t')}$ | (3.25) |

This gives the horizon at time $t$; to reiterate, the maximal co-moving distance that information about an event at $t=0$ can travel by time $t$. At the same time it doubles as an alternative time variable replacing $t$.

The use of changing variables once more to this time is that causality is especially transparent to discuss in terms of $\eta$.
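To get a feeling for the convergence of the horizon integral, here is a minimal numerical sketch of the conformal time $\int_0^t dt'/a(t')$. The power-law scale factors used are illustrative assumptions (they are not derived at this point of the notes), and the substitution $t'=u^3$ is just a numerical convenience that keeps the integrand finite near $t'=0$ for these examples. The function names are ours.

```python
def conformal_time(scale_factor, t, steps=10000):
    """Integral of dt'/a(t') from 0 to t, using the substitution t' = u^3."""
    u_max = t ** (1.0 / 3.0)
    du = u_max / steps
    total = 0.0
    for i in range(steps):
        u = (i + 0.5) * du                      # midpoint of each slice
        total += 3.0 * u * u * du / scale_factor(u ** 3)
    return total


t0 = 1.0                                        # present time, arbitrary units


def matter_era(t):
    return (t / t0) ** (2.0 / 3.0)              # assumed a(t), with a(t0) = 1


def radiation_era(t):
    return (t / t0) ** 0.5                      # assumed a(t), with a(t0) = 1


eta_matter = conformal_time(matter_era, t0)
eta_radiation = conformal_time(radiation_era, t0)
print(eta_matter, eta_radiation)
```

For these two power laws the integral can also be done by hand, giving $3t_0$ and $2t_0$ respectively, so the horizon is finite even though $1/a$ diverges at the lower limit.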

Causality

Consider two events occurring at times $t_1$ and $t_2$ ($t_1<t_2$) at two different co-moving coordinate points; let’s say for concreteness event one is a supernova explosion in a distant galaxy and event two is the decision of a scientific committee on whether or not to build an underground neutrino detector here on Earth. The fastest that information from the supernova can travel is the speed of light, and so by $t_2$ this information has covered a co-moving distance:

| $\displaystyle\int_{t_1}^{t_2}\frac{dt}{a(t)}$ | (3.26) |

Assume that the two points are far enough apart that their co-moving separation exceeds this distance, that is, the horizon of event 1 by the time of event 2 is smaller than the distance between them. In this case information about the supernova could not have reached the committee at the time of the decision; we say the two events are causally disconnected: they couldn’t have influenced one another in any way. The light of the supernova will reach the planet later and neutrinos a bit later still; if the detector was built these would provide useful data, but the committee couldn’t possibly have known at the time! As history has it a number of government agencies around the world took the right decision, even if they did not have supernovae in mind, see SN 1987A.

As a last discussion on causality which will be of use later, let’s consider the possible relation between events A, B in opposite directions in the sky which occurred at the same time $t_e$ and whose light is just reaching us today on Earth. The fact that the light from these events is just reaching us now, if it has travelled unperturbed, means that the comoving distance to each event and its comoving horizon today coincide:

| $\displaystyle\int_{t_e}^{t_0}\frac{dt}{a(t)}=\eta_0-\eta_e$ | (3.27) |

with $\eta_e$ and $\eta_0$ the conformal time of eq. (3.25) at $t_e$ and today (in practice we can pick simultaneous events by selecting equal redshifts). Say now that the two events look strangely similar and so we ask, could they have had a common cause? Posed mathematically, the question is: can they be fitted on the same light cone? Fig. 8 tries to depict the possible situations; shifting about the two simultaneous events in the presence of a vertex will convince you that the earliest the two positions could have obtained the same information is if it was emitted from the midpoint at $\eta=0$. By the time when the events took place the possible original signal would have covered a diameter of $2\eta_e$, which we can compare with their distance derived from eq. (3.27)

| $2\,\eta_e\geq 2\,(\eta_0-\eta_e)\quad\Rightarrow\quad \eta_e\geq\dfrac{\eta_0}{2}$ | (3.28) |

The rather simple answer we obtain is that events that we see in opposite ends of the sky cannot be causally connected if they occurred in the first half of the conformal lifetime of the universe. On the other hand when we say causally connected we mean they could have been, but they need not.
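The light-cone argument above reduces to a one-line comparison, sketched below. To turn it into a statement about redshift we additionally assume, purely for illustration, a universe that is matter dominated throughout, for which conformal time scales as the square root of the scale factor; this assumption and the function names are ours and not part of the argument in the text.

```python
def could_share_cause(eta_event, eta_today):
    """Antipodal events seen today could share a cause only if the light-cone
    diameter at the event, 2*eta_e, reaches their separation, 2*(eta_0 - eta_e)."""
    separation = 2.0 * (eta_today - eta_event)
    reach = 2.0 * eta_event
    return reach >= separation


def eta_ratio_matter(z):
    """eta(z)/eta_0 = (1 + z)^(-1/2), ASSUMING matter domination at all times."""
    return (1.0 + z) ** -0.5


for z in (1.0, 2.0, 10.0, 1000.0):
    print(z, could_share_cause(eta_ratio_matter(z), 1.0))
```

Under this assumption the dividing line sits at $1+z=4$: antipodal events at much higher redshift than that could not have had a common cause.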

Problems

I Show, given the covariant derivative action on lowered indices that the derivative acting on the invariant object satisfies

| (3.29) |

II Compute the connection elements , for space-curvature-less () metric:

| (3.30) |

Check that any other element is either related by or zero.

III Take a universe with with and compute explicitly conformal time and redshift , . What time and redshift corresponds to ?

IV Turn eq. (3.19) into a differential equation for and solve for with initial conditions . If initially the energy was much above the mass, what value of approximately separates the regions where and ?

V Derive the geodesic equation from the variational principle applied to:

4 Einstein’s equations

Thus far we have taken the metric as a given, a background where we studied the evolution of light and matter, the position of our horizons and the galaxies. The metric is however dynamic and it is affected by what and how much matter is there and perturbations on the metric propagate as light does to first order.

In loose terms the dynamics of the metric will be given by an equation of motion which will contain second order derivatives, just as Maxwell’s equations contain second order derivatives of the gauge potential $A_\mu$. These equations of motion will not be arbitrary but admit a covariant form, like the geodesic equation. This means that the equations of motion themselves are in tensor form and obey simple transformation rules which guarantee they look formally the same to all observers.

4.1 Curvature

The covariant second-order derivative that can be built out of the metric is the Riemann tensor defined through the commutator of covariant derivatives:

| (4.1) |

which reads, in terms of the connection

| (4.2) |

The Riemann tensor is antisymmetric under exchange of the last two indices by definition but also under exchange of the first two with lowered indices , symmetric under exchange as and with a vanishing cyclic combination of the last 3 indexes which means that, all in all, the number of independent components is 20.

Contracting two of the indices we obtain a two-index tensor which is called the Ricci tensor:

| (4.3) |

which is symmetric . Finally the Ricci tensor can be reduced to a scalar by contracting the two remaining indices

| (4.4) |

which is an invariant under coordinate transformations.

For the second ingredient in the dynamics, the fact that matter sources gravity would mean the equating of a combination of the tensors above to a tensor describing matter. Rather than trying out different possible tensor structures there is a more direct and restrictive route in the use of a variational principle. All the types of fundamental dynamics known to us can be described by a variational principle, which states that the classical solution to the EoM is that which minimizes the action functional $S$. The action functional, for a common description of physics for all observers, is an invariant built out of the metric and matter fields. This still does not say what this functional form is; however, if we assume that the EoM are linear in the curvature there is one answer only:

| (4.5) |

where is Newton gravitational constant, in units, GeV, in the matter Lagrangian we have hidden the matter fields, we included a cosmological constant term in and is the covariant measure (you can think of the square root as the Jacobian).

As customary, the EoM for the metric is now obtained by taking an infinitesimal variation [footnote 3: unfortunately the opposite convention for the metric means that in Einstein’s equations ]:

| (4.6) |

where we have defined the stress energy tensor as

| (4.7) |

These are the celebrated Einstein field equations relating the curvature of space-time to matter. The curvature dependent piece of the equation is called Einstein tensor which one can compute explicitly with eqs. (4.2-4.4) for our metric

| (4.8) |

with the Connection we computed in eqs. (3.13-3.15). Again calculus and patience yields:

| (4.9) |

so that

| (4.10) |

where we introduced the notation .

4.2 Matter

The stress energy tensor as defined in eq. (4.7) is not very useful to us given we do not know a priori what type of matter there is. To determine the form of the stress energy tensor for different types of matter, the QFT-minded physicist would still begin from (4.7) and evaluate it, say, on a state with a particle of mass $m$. This can certainly be done and the problem section outlines how, but it is a rather cumbersome road to extract results for the present case.

One can instead start from the implications of homogeneity and isotropy to determine the form of . The homogeneous and isotropic nature of the metric means the curvature is also so, but Einstein field equations relate the curvature to the stress-energy tensor so for consistency it must have the same form

| (4.11) |

where $p$ is the pressure and $\rho$ the energy density.

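The stress energy tensor’s pressure and energy density are related in the limiting cases of massless and non-relativistic matter. To derive this relation let us consider a fixed-energy isotropic distribution of particles with energy $E$ and abundance $n=$ (number of particles)/(volume). Then the energy density is simply

| $\rho=n\,E$ | (4.12) |

What about the pressure? It is the force per unit area, so consider a tiny sphere of radius $r$; the number of particles inside is $n\,\frac{4}{3}\pi r^3$, each with momentum of modulus $\lvert\vec p\rvert$. After some time they would all have left the sphere, having carried a total momentum out of $n\,\frac{4}{3}\pi r^3\,\lvert\vec p\rvert$. This time would be when the last particles from the center have escaped, $\Delta t=r/v$ with $v=\lvert\vec p\rvert/E$ the velocity. Let’s put it all together then in the definition of pressure:

| $p=\dfrac{\text{force}}{\text{area}}=\dfrac{n\,\frac{4}{3}\pi r^3\,\lvert\vec p\rvert}{\Delta t\;4\pi r^2}=\dfrac{n\,\lvert\vec p\rvert\,v}{3}=\dfrac{n\,\lvert\vec p\rvert^2}{3E}$ | (4.13) |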

Particles in the universe nonetheless do not come in one single energy; assume we are given the distribution $f$ (we will later compute it for the equilibrium case) of number of particles per volume per three-momentum $d^3p$. Our approximation of isotropy means this distribution can only depend on the modulus $\lvert\vec p\rvert$ or alternatively the energy $E$ of the particle, so the total number density, energy density and pressure read:

| $n=\displaystyle\int d^3p\,f(E)\,,\qquad \rho=\int d^3p\,f(E)\,E\,,\qquad p=\int d^3p\,f(E)\,\frac{\lvert\vec p\rvert^2}{3E}$ | (4.14) |

where we note that these magnitudes read like averages and so as in statistical mechanics the average value of squared differs from the average of albeit for order of magnitude approximations is an error we can live with.

It is now easy to take the approximation of massless and non-relativistic matter to find:

| $p_r=\dfrac{\rho_r}{3}\,,\qquad p_m\simeq 0$ | (4.15) |

where with an abuse of notation we will refer to non-relativistic matter as matter with an m subindex and to ultra-relativistic matter as radiation with an r subindex.
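The massless and non-relativistic limits can be seen emerging from the integrals over the distribution with a short numerical sketch. Since the equilibrium distribution is only computed later in the notes, we assume here for illustration a Boltzmann-like weight $e^{-E/T}$; the pressure integrand $\lvert\vec p\rvert^2/(3E)$ is the one obtained from the sphere argument above. The function name and the integration cut-off are choices made for this example.

```python
import math


def equation_of_state(mass, temperature, steps=20000):
    """Ratio pressure / energy density for an isotropic distribution with
    weight exp(-E/T) (an ASSUMED form), integrating over the modulus of p."""
    # Cut-off chosen so that the neglected tail is exponentially small.
    p_max = 60.0 * temperature + 12.0 * math.sqrt(mass * temperature)
    dp = p_max / steps
    pressure = 0.0
    energy_density = 0.0
    for i in range(steps):
        p = (i + 0.5) * dp                      # midpoint rule
        energy = math.sqrt(p * p + mass * mass)
        # The common factor exp(-mass/T) cancels in the ratio; dropping it
        # avoids numerical underflow for heavy particles.
        weight = p * p * math.exp(-(energy - mass) / temperature) * dp
        pressure += weight * p * p / (3.0 * energy)
        energy_density += weight * energy
    return pressure / energy_density


print(equation_of_state(0.0, 1.0))      # massless: close to 1/3
print(equation_of_state(100.0, 1.0))    # heavy and cold: close to T/m, i.e. small
```

The overall normalisation of the distribution drops out of the ratio, which is why it does not need to be specified here.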

In addition any energy momentum tensor satisfies a conservation law, in parallel with the current conservation of electromagnetism $\partial_\mu J^\mu=0$, only now the derivative is covariant:

| $\nabla_\mu T^{\mu\nu}=0$ | (4.16) |

Our tensor then is subject to a constraint which, selecting the 0th component and using the connection of eq. (3.13), reads

| $\dot\rho+3\,\dfrac{\dot a}{a}\,(\rho+p)=0$ | (4.17) |

which tells us that if we know the time dependence of $\rho$, the time dependence of the pressure is given and there’s one less differential equation to solve.

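In the cases of radiation and matter it becomes an even stronger constraint since it is a closed equation for the time evolution of the energy density:

| $\dot\rho_r+4\,\dfrac{\dot a}{a}\,\rho_r=0\quad\Rightarrow\quad\rho_r\propto a^{-4}$ | (4.18) |

| $\dot\rho_m+3\,\dfrac{\dot a}{a}\,\rho_m=0\quad\Rightarrow\quad\rho_m\propto a^{-3}$ | (4.19) |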
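The scalings for radiation and matter can be verified by integrating the continuity equation directly. The sketch below assumes a constant ratio $w=p/\rho$ (with $w=1/3$ for radiation and $w=0$ for matter) and rewrites the equation as $d\rho/d\ln a=-3(1+w)\rho$, so that no knowledge of $a(t)$ is needed. The function name and step count are ours.

```python
import math


def evolve_density(a_final, rho_today, w, steps=10000):
    """Integrate d(rho)/d(ln a) = -3 (1 + w) rho from a = 1 to a_final,
    for a constant equation of state w = p / rho (assumption)."""
    dlna = math.log(a_final) / steps
    rho = rho_today
    for _ in range(steps):
        k1 = -3.0 * (1.0 + w) * rho
        k2 = -3.0 * (1.0 + w) * (rho + 0.5 * dlna * k1)   # midpoint slope
        rho += dlna * k2
    return rho


a = 0.1   # the universe at a tenth of its present size
for name, w in [("matter", 0.0), ("radiation", 1.0 / 3.0)]:
    # Numerical result next to the power law a^(-3(1+w)) for comparison.
    print(name, evolve_density(a, 1.0, w), a ** (-3.0 * (1.0 + w)))
```

Going back to a tenth of the present size, the matter density grows by a factor of a thousand while the radiation density grows by ten thousand, the extra power of $a$ being the redshift of each particle's energy.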

4.3 Einstein field equations

After all we have learned, let’s come back to Einstein’s field equations for the relation of metric, dynamics and matter in an FRW universe

| (4.20) | ||||

One can then pull out the two equations from the tensor structure to write:

| (4.21) |

These remarkable relations give us the reaction of the metric to the energy content of the universe, and given the fact that gravity couples to everything the RHS of these equations has an implicit sum over the energy density and pressure of all components. In particular the first equation gives the Hubble rate squared as the total energy density of the universe plus the curvature and cosmological constant. This brings a new light on a quantity we are already familiar with: inadvertently we have been carrying around the measure of the ‘weight’ of the universe.

| (4.22) |

Problems

I Einstein field equations for our metric give two differential equations for a single function . For consistency they should not be independent. Differentiate the component equation, use eq. 4.17 to substitute and the equation for a further substitution to arrive at the second equation. In general the consistency is ensured by the Einstein tensor satisfying .

II Compute the 00 component of the Ricci tensor given the Connection we computed in eq. (3.13) with

III Derive the curvature dependent part of Einstein field equations using

| (4.23) |

and recalling is the inverse of , .

IV Let’s count the number of independent components in Riemann’s tensor for dimensions. The conditions , mean that there’s values for the first two indices and the same for the second two. Further the condition means these two sets are interchangeable, show that this means a counting:

| (4.24) |

Finally for the cyclic condition , show that if any two of the indices are the same the previous conditions already ensure the vanishing. Then this only brings as many new conditions as combinations of 4 different indices and the final counting is:

| (4.25) |

V Derive the energy momentum tensor - Lagrangian relation

| (4.26) |

Now the form of this tensor depends on the state it is evaluated on; of relevance here will be a thermal ensemble, an homogeneous classical field and a single particle state. Here let’s take a single particle state in Minkowski space Diag(1,-1,-1,-1) with quantized field as and is volume)

compute , what is the energy density for th state?

5 The universe we live in

Starting from the description of the expanding universe with a time-dependent metric, we derived the curvature of space-time, which Einstein’s field equations relate to the total energy density of the universe. The remarkable equation we found is known as the Friedman equation and we will make use of it, evaluated at the present time, to find out about what makes up the known universe.

The first of Friedman’s equations can be written in terms of the Hubble rate as:

| (5.1) |

it is customary to define the critical density as

| (5.2) |

so that Friedman’s equation can be rewritten in a weighted form

| (5.3) | |||||

| (5.4) | |||||

with the terms giving the fractional contribution of each type of substance and where we assumed matter is either ultra-relativistic (radiation) or non-relativistic (matter) as is indeed the case today.
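To put a number on the critical density, the sketch below evaluates it in SI units, assuming the standard definition $\rho_c=3H_0^2/(8\pi G)$. The value of the Hubble rate used, 70 km/s/Mpc, is an illustrative round input and not a value quoted in these notes; the physical constants are rounded.

```python
import math

G_NEWTON = 6.674e-11        # m^3 kg^-1 s^-2
MPC_IN_M = 3.0857e22        # metres per megaparsec
PROTON_MASS = 1.6726e-27    # kg


def critical_density(h0_km_s_mpc):
    """3 H0^2 / (8 pi G) in kg/m^3, for H0 given in km/s/Mpc."""
    h0_si = h0_km_s_mpc * 1.0e3 / MPC_IN_M      # convert H0 to 1/s
    return 3.0 * h0_si ** 2 / (8.0 * math.pi * G_NEWTON)


rho_c = critical_density(70.0)                  # illustrative H0
print(rho_c)                                    # in kg per cubic metre
print(rho_c / PROTON_MASS)                      # equivalent protons per cubic metre
```

Expressed as an equivalent number of protons, the critical density corresponds to only a handful of particles per cubic metre, which gives a sense of how empty the universe is on average.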

There are a few caveats when taking this definition. The first is that not all components necessarily add up constructively to the total; in particular the curvature and the cosmological constant could give negative $\Omega$, as we have energy densities as per the definition in eq. (5.4)

| (5.5) | ||||

| (5.6) |

where we note that is indeed what appears in the action of eq. (4.5) and can be taken as the energy density when nothing else is there i.e. the vacuum. One also sees that living on a sphere would mean there is a negative contribution. That being said, we believe in our universe all contributions happen to be positive.

Next we turn to review each of the possible contributions and what the present value is.

5.1 Photons

Light permeates the universe in the form of a Cosmic Microwave Background which is, as sketched in the introduction, one of the pillars of cosmology. The Friedman equation allows for comparison with the other defining observational evidence in the Hubble rate, so let us compare the energy density in CMB photons to the critical density derived from $H_0$. Given that there is only one parameter that characterizes the CMB, the temperature, we can estimate the energy density by dimensional analysis:

| $\rho_\gamma=\dfrac{\pi^2}{15}\,T^4$ | (5.7) |

where with a justified abuse of notation we assumed the majority of the energy density of photons is given by those in the CMB, and anticipating results from chapter 6 we borrowed the constant out front to be $\pi^2/15$. Then one has

| (5.8) |

and so the contribution to the total at present time is negligible; it is not radiation that is driving the expansion of the universe.
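The size of the photon contribution can be reproduced with a few lines. The sketch works in SI units, where the blackbody energy density is the radiation constant times $T^4$ (the SI counterpart of a $T^4$ law in natural units). The inputs, a CMB temperature of 2.725 K and a Hubble rate of 70 km/s/Mpc, are illustrative assumptions rather than values taken from the text above, and the function name is ours.

```python
import math

RADIATION_CONSTANT = 7.5657e-16   # J m^-3 K^-4, equal to 4 sigma / c
SPEED_OF_LIGHT = 2.9979e8         # m/s
G_NEWTON = 6.674e-11              # m^3 kg^-1 s^-2
MPC_IN_M = 3.0857e22              # metres per megaparsec


def photon_fraction(t_cmb_kelvin, h0_km_s_mpc):
    """Blackbody photon energy density divided by the critical density."""
    energy_density = RADIATION_CONSTANT * t_cmb_kelvin ** 4    # J/m^3
    mass_density = energy_density / SPEED_OF_LIGHT ** 2        # kg/m^3
    h0_si = h0_km_s_mpc * 1.0e3 / MPC_IN_M                     # 1/s
    rho_c = 3.0 * h0_si ** 2 / (8.0 * math.pi * G_NEWTON)      # kg/m^3
    return mass_density / rho_c


omega_photons = photon_fraction(2.725, 70.0)
print(omega_photons)
```

With these inputs the fraction comes out at a few parts in a hundred thousand, in line with the statement that radiation is not what drives the expansion today.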

Nevertheless as we recall from solving the continuity equation for relativistic matter we have

| $\rho_\gamma(t)=\dfrac{\rho_\gamma(t_0)}{a^4(t)}$ | (5.9) |

and so the energy density used to be much larger in the early universe.

5.2 Neutrinos

There is sound theoretical evidence for a Cosmic Neutrino Background (CNB) yet the elusiveness of neutrinos has prevented its direct detection thus far. The detection of this background would allow exploration of even earlier times than those the CMB probes and would constitute a landmark discovery in cosmology.

Neutrinos fall in a peculiar category cosmologically since we believe their contribution to Friedman’s equation today is that of non-relativistic matter yet they have not had time to cluster like other known matter: they preserve an equilibrium distribution with temperature in parallel with the CMB. The contribution to the energy density is not known currently but only bounded from above and below given that at the elemental level we have not completed the picture of neutrino masses yet. This in turn means the possibility of cosmology itself completing this picture in one of the most explicit cases of particle physics and cosmology interplay.

What we are concerned with here nonetheless is the fractional contribution to the total coming from neutrinos. With their hybrid nature we have that an estimate of the number density is given by their temperature yet their energy is dominated today by the mass.

| (5.10) |

the temperature, given that the neutrinos used to be in equilibrium with the rest of matter, is ballpark the photon temperature; we will work out their explicit relation in chapter 7. The fractional contribution of neutrinos is then

| (5.11) |

laboratory-based bounds from tritium decay set eV and will improve to 0.2 eV [3], so one has that neutrinos contribute at most a percentage to the total. Note however that this is still sizeable, and cosmology provides a better bound on the sum of neutrino masses eV [2] and has the potential to complete the neutrino spectrum picture. On a more speculative note, the fact that the contribution falls not too far off the critical density has stimulated research with extra neutrinos playing the role of dark matter.

5.3 Baryonic matter

There is (yet again) a slight abuse in notation in cosmology when referring to baryonic matter since it also includes the electrons that surround atoms and yield an average charge neutral universe. That being said the possible misunderstanding is of no practical consequence for the energy density given protons are a thousand times heavier than electrons.

This type of matter is the most evident to the human eye in the night sky and indeed there are multiple reliable independent observations of the amount of baryonic matter in the known universe; some are

-

•

Most of the baryonic matter on cosmological scales is in the form of intergalactic dust, with the stars in galaxies making up a fractional amount. This dust or gas is heated by ambient light, which we can use to estimate how much of it there is.

-

•

Another method, similar in essence, is to look at the light of distant quasars to estimate how much of it was absorbed by intergalactic dust on its way to us.

-

•

The CMB contains information about small variations in energy density which are influenced by the amount of baryonic matter, and this provides an indirect probe of the baryon density.

-

•

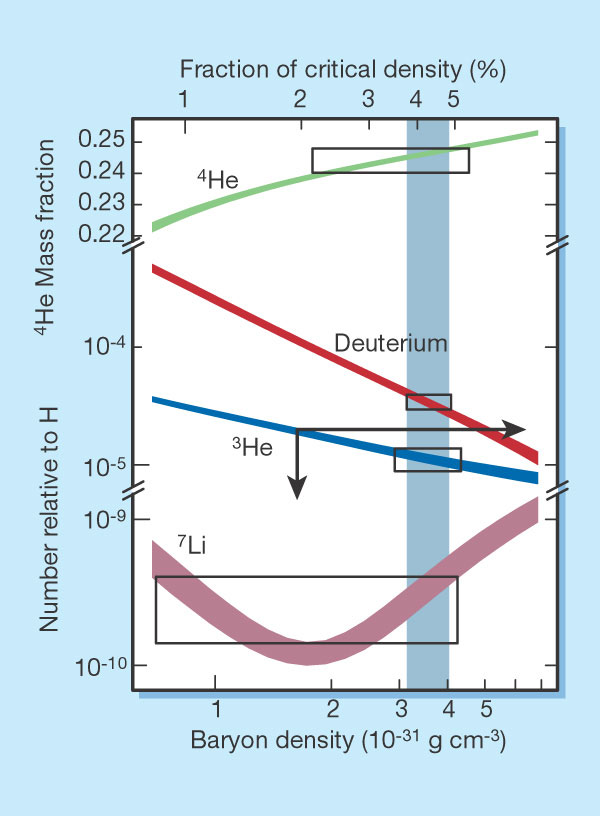

The ratios of light elements in the universe were determined by dynamics in the early plasma which depend on the baryon density.

All of these determinations agree on a contribution to the pie as [2]

| (5.12) |

again falling quite short of the total critical density given by Hubble rate.

Recall that the density of non-relativistic matter scales as $a^{-3}$, so one used to have a larger contribution as:

| $\rho_b(t)=\dfrac{\rho_b(t_0)}{a^3(t)}$ | (5.13) |

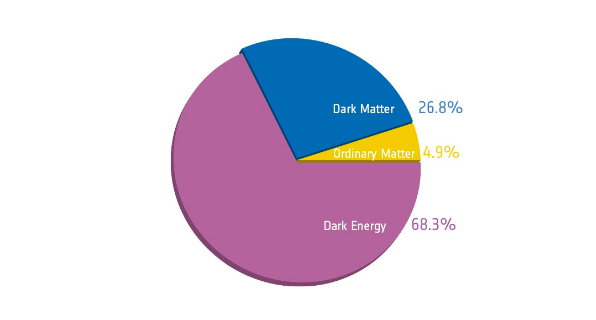

We have now exhausted the list of contributions from known particles, so whatever remains to make up the critical density is news to us.

5.4 Dark matter

The methods outlined above all rely on the interaction of light and ordinary matter, so they miss any substance that photons don’t interact with. There are other methods available that do not rely on this interaction, and they agree within errors on the striking fact that there is roughly 5 times more matter than what we see in baryons. Let us sketch some of these observations:

-

•

A technique to estimate the amount of mass in a given system is determining its gravitational potential. Just like all energy contributes to the Hubble rate squared in Friedman’s equation, the gravitational potential will be generated by the total mass (most systems are non-relativistic). This method applied to clusters of galaxies reveals that luminous matter is insufficient to keep the bound system together. The pressure of this same system, deduced from X-ray observations, leads to the same conclusion.

-

•

The power spectrum in the distribution of galaxies (the correlation of distance and density) is influenced by . This we can measure directly via galaxy surveys and the correlation of distributions of galaxies but also from the small anisotropies in the Cosmic microwave background.

-

•

In astrophysics the same method of measuring the gravitational potential can be applied within galaxies. Here one expects that in the outskirts of the galaxy when most of the luminous matter is contained in a shell around the centre the potential will fall as and so the average of orbital velocities will also fall as (remember the virial theorem).

-

•

Finally events like the crossing of two galaxies (e.g. bullet cluster) where the luminous matter mostly passed each other are interpreted as being dragged by some non-interacting matter making the bulk of the galaxy mass.

In these notes we will take the value [2]

| (5.14) |

5.5 Curvature

Experimental determination of the components in Friedman’s equation leaves no room, within errors, for purely spatial curvature. The universe, as far as we can see, seems spatially flat. There is a priori no theoretical reason to have this be the case, as outlined in the second lecture any value of the curvature is compatible with our assumptions of homogeneity and isotropy. In words more commonplace to high energy physics the case is not special since it has the same amount of symmetry as . As we shall see when introducing inflation, this circumstance might have arisen due to early time dynamics.

In terms of the room left for curvature, we have ([1])

| (5.15) |

Although the bound on curvature is often simply given on , it is good not to lose track of the fact that curvature is not a substance just because we hid it under a definition. Via the expression for curvature in eq. (5.5), this translates into

| (5.16) |

To put this bound in perspective, given we have sent probes up to distances of pc, the proportions would be the same if one has that a microbe which has explored some 10m makes deductions about the radius of the earth.

5.6 Cosmological Constant

In one of the greatest landmark discoveries in cosmology, and one that the community is still scrambling to make sense of, we learned about the vacuum by looking at the largest scales accessible.

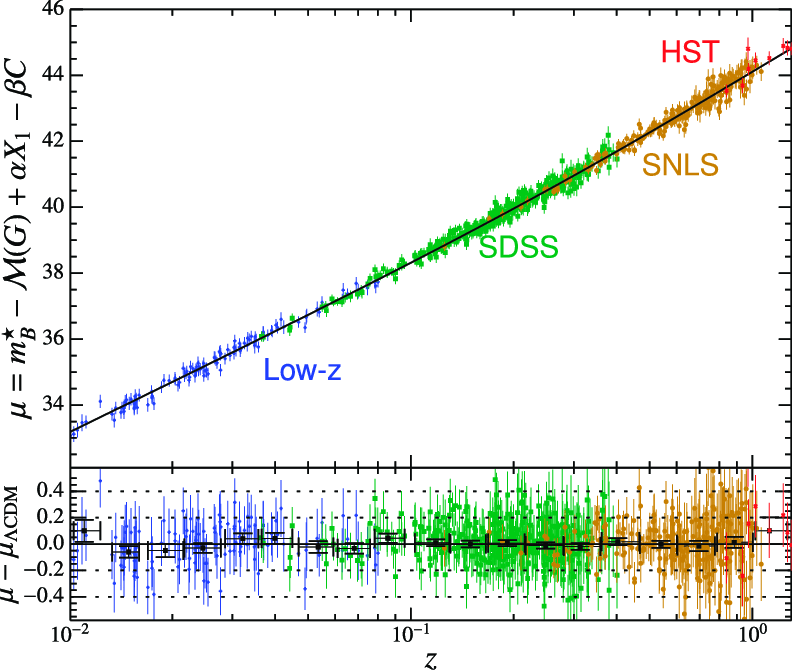

First the evidence. The method we review here is based on luminosity distance as given in sec. 2.3. Recall this is the distance we deduce from comparing the apparent brightness of an object to its a priori known luminosity. In this case the objects are type Ia supernovae and the quantity used experimentally to measure brightness is the apparent magnitude (m) which is given in terms of absolute magnitude and times the decimal logarithm of flux so ([4])

| (5.17) |

Given the observed redshift of a supernova one can determine its comoving distance and luminosity distance as (take as the time of emission)

| (5.18) |

Type Ia supernovae occur when white dwarfs exceed the Chandrasekhar mass limit and explode, in a process which is believed to be fairly independent of the formation history. One can then take supernovae and, given that they have the same absolute magnitude, assume that differences in their apparent magnitude must be given simply by how far from us they are. In particular the luminosity distance which as made explicit above depends on the type of universe via the different dependence of .

Let us flesh this out further and take two actual data points with the same magnitude ; supernova 1992P with and and 1997ap with and . The first supernova is close enough that we can approximate to Hubble today and so () for 1992P. The other supernova is far enough to be sensitive to the different cosmological scenarios; we find from experiment only with the previous approximations

| (5.19) |

This is a magnitude that we can compute with eq. (5.18) given the limits of integration and taking the universe to be dominated by our best-guess-substance. Let us take two cases:

| (5.20) | ||||||

| (5.21) |

Neither of them falls squarely on top of the experimental observation, but we have overlooked statistical and systematic errors, so we would not expect them to. What we observe is that the $\Lambda$ dominated universe falls 3 times closer to the experimental value, and indeed a proper analysis such as that which can be found in the original papers, as well as other measurements, provides the evidence for a cosmological constant term . The central value for this contribution we will take from [2] as

| (5.22) |

Which completes the cosmological pie in fig. 9.
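The comparison between the two single-substance universes can be sketched numerically. We assume a spatially flat universe, for which the comoving distance is the integral of $dz/H(z)$ and the luminosity distance is $(1+z)$ times that, and we measure distances in units of $1/H_0$. The redshift $z=0.83$ used below is an illustrative input of ours, as are the function and variable names.

```python
import math


def luminosity_distance(z, hubble_ratio, steps=10000):
    """Luminosity distance in units of 1/H0 for a spatially flat universe:
    (1 + z) times the integral of dz'/E(z') from 0 to z, with E = H/H0."""
    dz = z / steps
    chi = sum(dz / hubble_ratio((i + 0.5) * dz) for i in range(steps))
    return (1.0 + z) * chi


def matter_only(z):
    return (1.0 + z) ** 1.5      # H/H0 when only non-relativistic matter is present


def lambda_only(z):
    return 1.0                   # H/H0 for a pure cosmological constant


z = 0.83                         # illustrative redshift (assumption)
d_matter = luminosity_distance(z, matter_only)
d_lambda = luminosity_distance(z, lambda_only)
print(d_matter, d_lambda)
# Difference in apparent magnitude between the two scenarios at this redshift.
print(5.0 * math.log10(d_lambda / d_matter))
```

The same object looks fainter, i.e. further away, in the universe dominated by a cosmological constant, and the difference at redshifts of order one is about a magnitude, which is what makes distant supernovae a sensitive probe.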

This substance then rules the present evolution of the universe yet cosmology is the only evidence we have for it. If we go back to the action in eq. (4.5) we find that as defined in the ratio appears as a constant in the action; is the energy density when there is nothing else: the energy density of the vacuum. It is in a sense such an elementary term yet in most applications we do not see this energy density; we are interested in differences in energy. We study the kinetic energies of particles or differences in potential energies when we change their separation. Gravity however, through Einstein’s field equations, is sensitive to the total energy density including that of empty space.

The description of this energy density as a substance in the stress energy tensor can be made in full by defining

| (5.23) |

Unlike other contributions this density is independent of $a$, which means a constant Hubble rate. This in turn has the rather dramatic effect of an accelerated expansion, as we see if we go back to the second Friedmann equation

| (5.24) |

where we used the first equation to substitute . One has therefore that all substances with positive or zero pressure produce a deceleration of the expansion. A cosmological constant however, with a negative pressure as in eq. (5.23), overcomes the $\rho$ contribution and produces an accelerated expansion instead. To recall what negative pressure means, here is a refresher of thermodynamics: the change in internal energy (an extensive quantity) for a change in volume $dV$ is

| $dU = -p\,dV$ | (5.25) |

So for negative pressure the internal energy (again an extensive quantity) increases with volume.
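The sign argument can be made concrete in a few lines. The sketch assumes the second Friedmann equation has the standard form $\ddot a / a = -\frac{4\pi G}{3}(\rho + 3p)$ and describes each substance by a constant equation of state $p = w\rho$. The parameter `w` and the function name are introduced here for illustration only.

```python
def acceleration_sign(w, rho=1.0):
    """Sign of a''/a for one substance with p = w * rho and rho > 0.

    Assumes a''/a = -(4 pi G / 3) (rho + 3 p), so the sign is
    opposite to that of (rho + 3 p).
    """
    source = rho + 3.0 * w * rho
    if source > 0:
        return -1  # decelerated expansion
    if source < 0:
        return +1  # accelerated expansion
    return 0

substances = [
    ("matter", 0.0),                   # p = 0
    ("radiation", 1.0 / 3.0),          # p = rho / 3
    ("cosmological constant", -1.0),   # p = -rho
]
for name, w in substances:
    print(f"{name:22s} w = {w:+.2f}  sign of a''/a: {acceleration_sign(w):+d}")
```

Only the substance with sufficiently negative pressure, here $w = -1$, flips the sign and accelerates the expansion.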

As a last word to spice up the discussion and give a sense of how little we know on the subject, we can take our theory of Nature today, the pinnacle of human knowledge that is the Standard Model and try to estimate . A prediction strictly speaking is not possible since we take the action to be our definition of the model so is an input not an output; we can however estimate the quantum corrections on top of this input that give the effective . In an exercise in dimensional analysis and counting which you should be familiar with by the end of the MSc one can estimate

| (5.26) |

You can amuse yourself by seeing how far off this estimate is, given that is the Higgs mass GeV and eV
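A back-of-the-envelope version of this comparison is sketched below. It assumes the crudest dimensional estimate, a vacuum energy density of order $m_h^4$ with no loop or numerical factors (eq. (5.26) may contain factors not reproduced here), and an observed vacuum energy scale of a few $10^{-3}$ eV. Both numerical inputs are order-of-magnitude assumptions made for this illustration.

```python
import math

# ASSUMPTION: Higgs mass of about 125 GeV, expressed in eV
higgs_mass_eV = 125e9
# ASSUMPTION: observed rho_Lambda^(1/4), order of magnitude only
vacuum_scale_eV = 2.3e-3

estimated_density = higgs_mass_eV ** 4    # crude estimate, rho ~ m_h^4
observed_density = vacuum_scale_eV ** 4

orders_off = math.log10(estimated_density / observed_density)
print(f"The estimate exceeds the observed value by about 10^{orders_off:.0f}")
```

Changing either input by a factor of a few moves the exponent by one or two units at most, so the size of the mismatch does not depend on the details of the estimate.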

Problems

I (i) Given the average energy of a photon, proton and , GeV use the value of the critical density and the contribution on the pie chart to estimate how many photons and protons there are on average per cm$^3$. (ii) Neutrinos have a temperature a factor below that of the photons and their abundance is given by ; estimate their abundance. (iii) Assume dark matter is made of a single species of non-relativistic particles with mass and derive an expression for the abundance of dark matter as a function of the mass.

II Derive yourself the bound on the universe radius starting from eq. and using the critical density.

III Compute the luminosity distance for two values of $z$: start by recasting the Friedmann equation as the dependence of the Hubble rate on redshift for a matter-dominated and a CC-dominated universe.

Work out the integral for matter-dominated and cosmological-constant-dominated universes (both flat) to obtain

| (5.27) |

Plot these two lines and the experimental points for 1992P and 1997ap. Which line falls closer?

6 The universe in equilibrium

Electromagnetic radiation is a tiny part of the present composition of the universe, yet it holds the key to the early epoch of our universe. The reason for this is twofold: this radiation is what remains of the primordial plasma in an almost frozen-in-time form and, due to the $a^{-4}$ scaling of the radiation energy density, it used to be the dominant component in the Friedmann equation at early times and ruled the expansion.

6.1 Equilibrium distribution

As outlined in the introduction, studying the CMB is studying a substance in equilibrium, so first, for completeness, we go through an overview of statistical mechanics (we momentarily restore $\hbar$ in our equations to remind us of the quantum nature). Consider phase space ($\vec x$, $\vec p$) for a particle species with energy given to a good approximation by that of a free particle, $E = \sqrt{m^2 + \vec p^{\,2}}$. Heisenberg’s uncertainty principle states that the tightest one can ‘pack’ a particle in phase space is inside a volume $(2\pi\hbar)^3$. This is the unit of phase-space volume used to count particles, which is to say

| (6.1) |

with $f$ a probability distribution in phase space and $V$, $N$ the volume and particle abundance. Alternatively, if you find this argument too vague, you can put particles in a box of length $L$ to find the density of states through the quantization of momentum:

| (6.2) |

with a 3-vector with positive integer values, .

Next, in a thermal distribution the number of particles is not conserved, and we consider placing any number of particles in any of the states in phase space. The options differ depending on whether one has a boson or a fermion, so the two are discussed in parallel, for Bose-Einstein (BE) and Fermi-Dirac (FD) distributions respectively. Consider first the partition function at fixed energy $E$; for a boson we can have any number of particles in a given state, call it $n$, and so the energy is $nE$. The partition function is then

| $Z_{\rm B} = \sum_{n=0}^{\infty} e^{-n(E-\mu)/(k_B T)} = \frac{1}{1 - e^{-(E-\mu)/(k_B T)}}$ | (6.3) |

where we also included a chemical potential $\mu$ for completeness and used the geometric series to perform the sum. The sum for fermions is much simpler, since each state holds one particle or none:

| $Z_{\rm F} = \sum_{n=0}^{1} e^{-n(E-\mu)/(k_B T)} = 1 + e^{-(E-\mu)/(k_B T)}$ | (6.4) |

In the following we will set the Boltzmann constant

| (6.5) |

to 1, implying temperature and energy have the same units. The expected number of particles per phase-space cell at a given energy $E$ is then the sum over occupation numbers $n$, each weighted by its probability and divided by the sum of probabilities $Z$. This can be synthesized in the derivative:

| (6.6) |
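The derivative prescription can be checked numerically. The sketch below assumes the partition sums described above, with $k_B = 1$, and that the mean occupation follows from $\langle n \rangle = T\,\partial \ln Z / \partial \mu$. It compares a finite-difference derivative with the familiar closed forms $1/(e^{(E-\mu)/T} \mp 1)$. The function names and the sample values of $E$, $\mu$ and $T$ are chosen here purely for illustration.

```python
import math

def ln_z_boson(E, mu, T, n_max=400):
    # Any occupation number n = 0, 1, 2, ... (sum truncated at n_max)
    return math.log(sum(math.exp(-n * (E - mu) / T) for n in range(n_max)))

def ln_z_fermion(E, mu, T):
    # Occupation number n = 0 or 1 only
    return math.log(1.0 + math.exp(-(E - mu) / T))

def mean_occupation(ln_z, E, mu, T, h=1e-5):
    """<n> = T * d(ln Z)/d(mu), via a central finite difference."""
    return T * (ln_z(E, mu + h, T) - ln_z(E, mu - h, T)) / (2.0 * h)

def closed_form(E, mu, T, sign):
    # sign = -1 for bosons, +1 for fermions
    return 1.0 / (math.exp((E - mu) / T) + sign)

E, mu, T = 1.0, 0.0, 0.5  # sample values in arbitrary energy units
n_boson = mean_occupation(ln_z_boson, E, mu, T)
n_fermion = mean_occupation(ln_z_fermion, E, mu, T)
print("boson:  ", n_boson, closed_form(E, mu, T, -1))
print("fermion:", n_fermion, closed_form(E, mu, T, +1))
```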

This completes all components we need to describe the thermal ensemble of particles and one can put it to use right away in the CMB today. Experimental evidence indicates that the chemical potential can be neglected for the CMB and indeed this will be the case for substances in chemical equilibrium as we shall see in chapter 8. The number density of photons in the bath is then

| (6.7) |

where the degeneracy factor $g = 2$ comes from the 2 possible polarizations of photons, we changed variables to $x = p/T$ and $\zeta$ is the Riemann zeta function, with $\zeta(3) \simeq 1.202$. The energy per unit volume in the CMB is, on the other hand, the integral over the distribution function times the energy

| (6.8) |

where in this case Riemann’s zeta function has an exact expression which we substituted in, $\zeta(4) = \pi^4/90$. We have therefore now computed the proportionality constant that we took as a given in chapter 5, eq. 5.7.
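To put numbers on these results, the sketch below evaluates the two dimensionless Bose-Einstein integrals numerically and converts the result to laboratory units. It assumes the standard forms $n_\gamma = \frac{g}{2\pi^2} T^3 \int_0^\infty \frac{x^2\,dx}{e^x - 1}$ and $\rho_\gamma = \frac{g}{2\pi^2} T^4 \int_0^\infty \frac{x^3\,dx}{e^x - 1}$, and a CMB temperature of 2.725 K. The conversion constants and the temperature are inputs supplied here, not taken from the text.

```python
import math

def bose_moment(power, x_max=60.0, steps=200_000):
    """integral_0^x_max x^power / (e^x - 1) dx, midpoint rule."""
    dx = x_max / steps
    return sum(
        ((i + 0.5) * dx) ** power / math.expm1((i + 0.5) * dx) * dx
        for i in range(steps)
    )

ZETA_3 = 1.2020569
number_integral = bose_moment(2)  # expected: 2 * zeta(3)
energy_integral = bose_moment(3)  # expected: pi^4 / 15

K_B_EV_PER_K = 8.617333e-5   # Boltzmann constant in eV / K
HBAR_C_EV_CM = 1.973270e-5   # hbar * c in eV cm
T_CMB_K = 2.725              # ASSUMPTION: CMB temperature today

T_eV = K_B_EV_PER_K * T_CMB_K
g = 2  # photon polarizations
prefactor = g / (2.0 * math.pi ** 2)
n_gamma = prefactor * number_integral * (T_eV / HBAR_C_EV_CM) ** 3      # per cm^3
rho_gamma = prefactor * energy_integral * T_eV ** 4 / HBAR_C_EV_CM ** 3  # eV per cm^3

print(f"n_gamma   ~ {n_gamma:.0f} photons / cm^3")
print(f"rho_gamma ~ {rho_gamma:.3f} eV / cm^3")
```

Factors of $\hbar c$ are restored only in the last step, so the integrals themselves are pure numbers.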

The difference in the case of relativistic fermions is slight but computable exactly

| (6.9) |

where we took a neutrino to be our massless fermion, with $g = 2$ in the Standard Model for the two spin states. In reality there are three neutrinos and they are massive, the consequences of which we will see in due time.
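The size of the fermionic correction can be seen by integrating both distributions numerically. The sketch below assumes massless particles with zero chemical potential and equal degeneracy, and compares the number and energy integrals for the two statistics. The expected ratios, 3/4 for the number density and 7/8 for the energy density, are the standard results. The helper name `thermal_moment` is introduced here for illustration.

```python
import math

def thermal_moment(power, sign, x_max=60.0, steps=100_000):
    """integral_0^x_max x^power / (e^x + sign) dx, midpoint rule.

    sign = -1 gives the Bose-Einstein case, sign = +1 the Fermi-Dirac case.
    """
    dx = x_max / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * dx
        total += x ** power / (math.exp(x) + sign) * dx
    return total

# Fermion-to-boson ratios at equal temperature and degeneracy
number_ratio = thermal_moment(2, +1) / thermal_moment(2, -1)
energy_ratio = thermal_moment(3, +1) / thermal_moment(3, -1)

print(f"n_fermion / n_boson     = {number_ratio:.6f}")
print(f"rho_fermion / rho_boson = {energy_ratio:.6f}")
```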

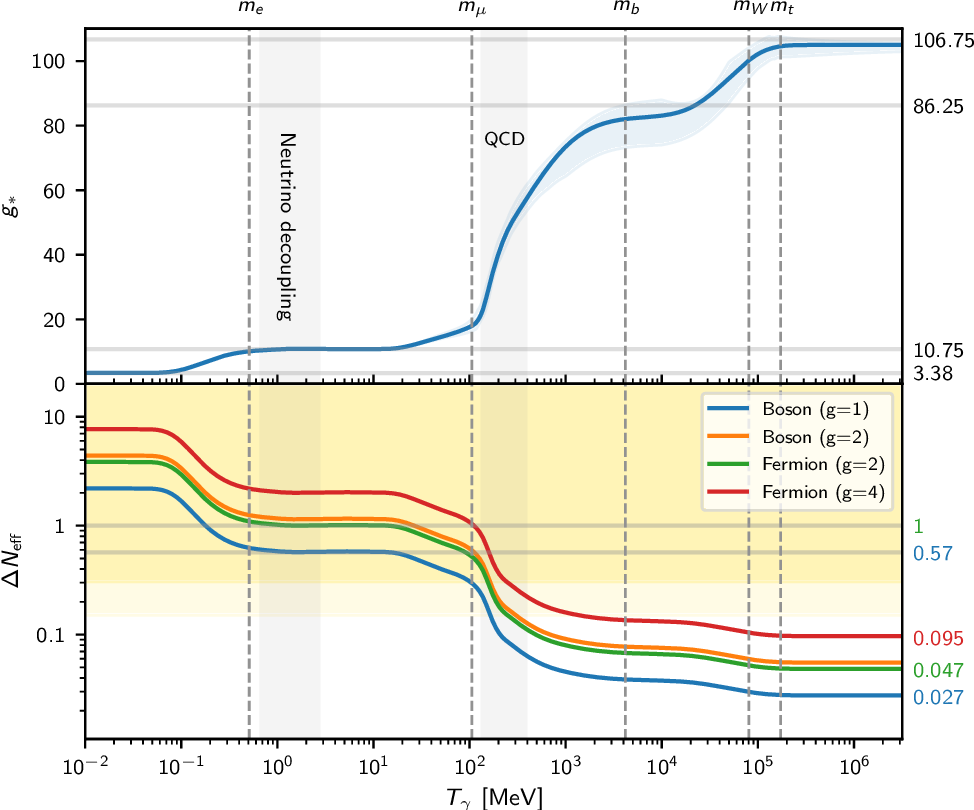

One can put the two cases together in an expression for relativistic particles as:

| (6.10) |

assuming all particles have the same temperature $T$. If some species have a different temperature $T_i$ one can still use the above definition with a reference temperature $T$ and the degeneracy of those species rescaled by $(T_i/T)^4$.
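The counting with a temperature rescaling can be sketched as follows. The code assumes that eq. (6.10) defines the effective number of relativistic degrees of freedom as the sum of bosonic degeneracies plus 7/8 of the fermionic ones, each weighted by $(T_i/T)^4$. As an example it takes photons plus three neutrino species, treated as massless, with the neutrinos colder by the standard factor $(4/11)^{1/3}$. That factor, the function name and the data layout are assumptions of this illustration.

```python
def g_star(species, reference_temperature):
    """Effective relativistic degrees of freedom for the energy density.

    species: iterable of (degeneracy, is_fermion, temperature) tuples.
    """
    total = 0.0
    for degeneracy, is_fermion, temperature in species:
        statistics_weight = 7.0 / 8.0 if is_fermion else 1.0
        rescaling = (temperature / reference_temperature) ** 4
        total += statistics_weight * degeneracy * rescaling
    return total

T_photon = 1.0  # reference temperature, arbitrary units
# ASSUMPTION: standard ratio of neutrino to photon temperature
T_neutrino = (4.0 / 11.0) ** (1.0 / 3.0) * T_photon

species = [
    (2, False, T_photon),    # photons: 2 polarizations
    (6, True, T_neutrino),   # 3 neutrino species x 2 states, taken massless
]
g_star_value = g_star(species, T_photon)
print(f"g_* = {g_star_value:.2f}")
```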

Another quantity we will find useful is the entropy density for a particle with negligible chemical potential which is defined as

| (6.11) |

recalling thermodynamics relation . For all relevant processes of study here the entropy will be dominated by relativistic particles in which case .

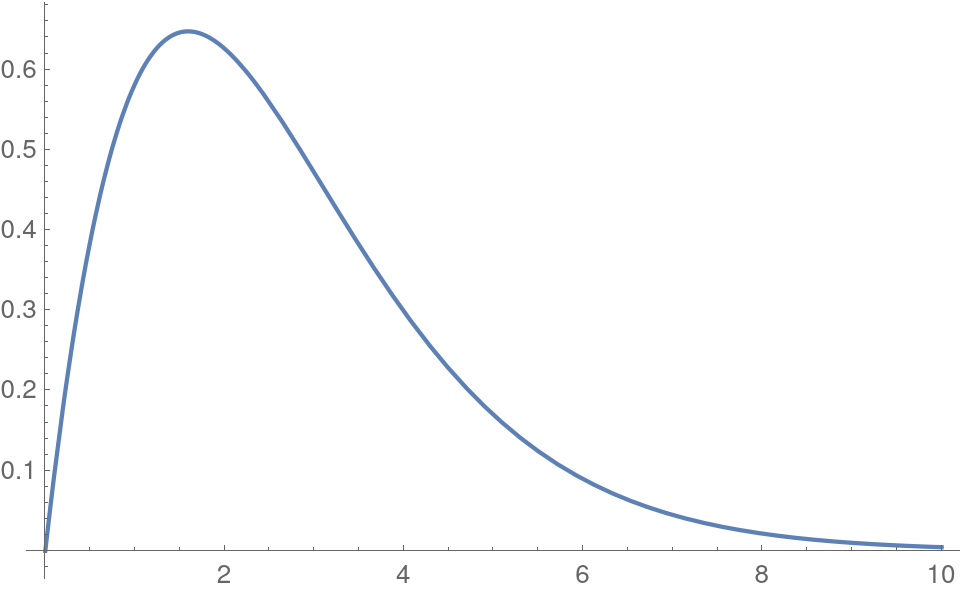

Let us turn now to the other type of contributions in Friedmann’s equation. For a non-relativistic particle the distribution function can be approximated as:

| (6.12) |

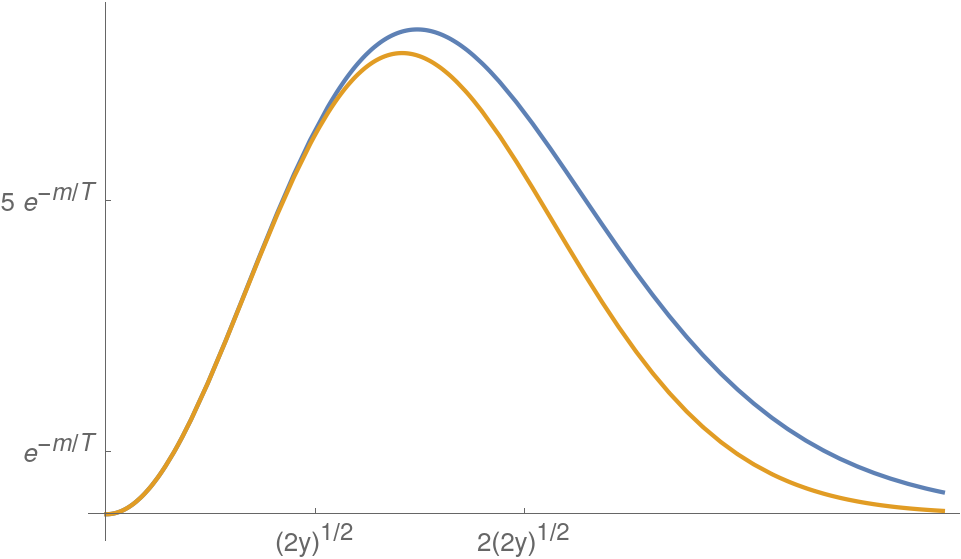

Here we see that in this approximation the distribution is independent of the bosonic or fermionic nature. The approximation is off for the high-energy tail, where the distribution falls as an exponential and not as a Gaussian as the approximation above gives; this can be appreciated in fig. 10. Nonetheless, for integrated magnitudes this part of the spectrum contributes an exponentially small share and we can approximate for non relativistic (), , particles in the plasma:

| (6.13) |

Equivalently any other magnitude will have an exponential suppression and units . We can understand the exponentially small number of particles in simple terms: the average energy available for interactions is not enough to produce the species of mass $m$; only those particles in the high tail of the distribution have enough energy, but their abundance is smaller by precisely this exponential factor.
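The quality of the non-relativistic approximation can be checked against a direct numerical integration of the full distribution. The sketch assumes zero chemical potential and that eq. (6.13) is the standard result $n \simeq g\,(mT/2\pi)^{3/2} e^{-m/T}$. The choice $m/T = 100$ and the function names are illustrative.

```python
import math

def number_density(m, T, g=1, sign=+1, steps=20_000):
    """n = g / (2 pi^2) * integral p^2 dp / (exp(E/T) + sign).

    E = sqrt(p^2 + m^2); sign = +1 for fermions, -1 for bosons.
    The integral is cut off at 15 thermal widths sqrt(m T).
    """
    p_max = 15.0 * math.sqrt(m * T)
    dp = p_max / steps
    total = 0.0
    for i in range(steps):
        p = (i + 0.5) * dp
        energy = math.sqrt(p * p + m * m)
        total += p * p / (math.exp(energy / T) + sign) * dp
    return g * total / (2.0 * math.pi ** 2)

def number_density_nr(m, T, g=1):
    # ASSUMED form of the non-relativistic limit
    return g * (m * T / (2.0 * math.pi)) ** 1.5 * math.exp(-m / T)

m, T = 100.0, 1.0  # illustrative values with m >> T
n_fermion = number_density(m, T, sign=+1)
n_boson = number_density(m, T, sign=-1)
ratio = n_fermion / number_density_nr(m, T)
print(f"exact / approximate     = {ratio:.4f}")
print(f"fermion / boson minus 1 = {n_fermion / n_boson - 1.0:.1e}")
```

For this value of $m/T$ the two statistics are indistinguishable within floating-point precision, and the approximation is off only at the percent level, a correction of relative order $T/m$.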


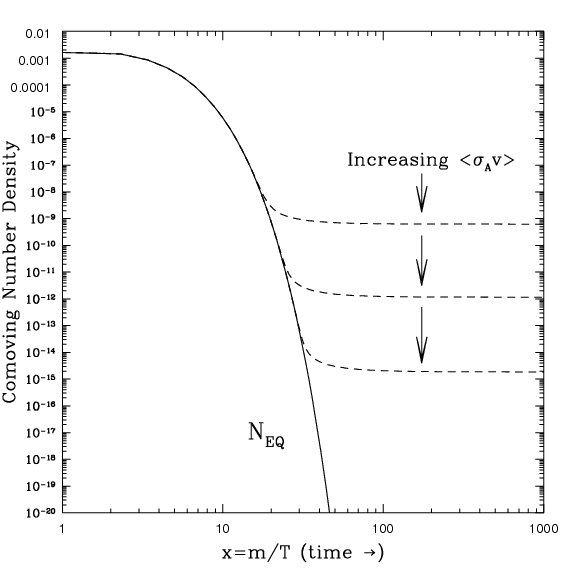

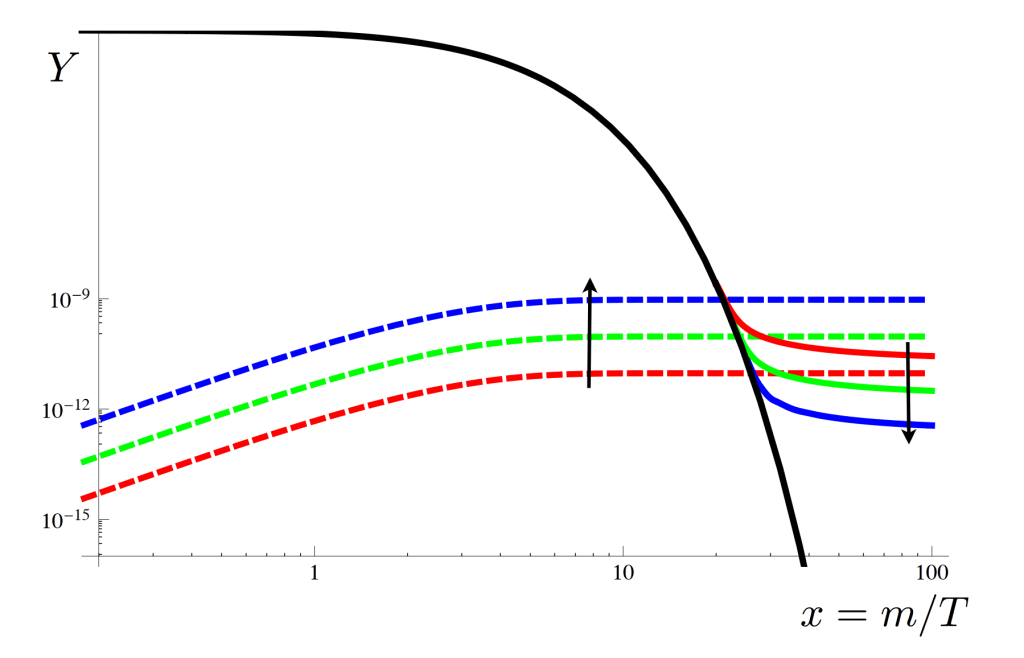

6.2 Equilibrium in an expanding universe

Thus far the discussion has overlooked the fact that this thermal bath is placed in an expanding universe. What keeps the bath in equilibrium is the continuous interaction of particles with one another; if the expansion rate is slower than the interaction rate of the particles, the equilibrium distribution is preserved. The two magnitudes to compare are therefore i) the Hubble rate $H$, whose inverse gives the time it takes for the universe to roughly double in size, versus ii) the interaction rate $\Gamma$, whose inverse gives the average time between interactions. The latter can be estimated as $\Gamma = n \sigma v$, with $\sigma$ the cross section, $v$ the relative velocity and $n$ the number density of the particles that the species we are interested in interacts with.

For now let us assume $\Gamma \gg H$ so equilibrium is preserved. The adiabatic approximation then applies, which in practice means the expressions we were using are valid but now the magnitudes change with time, $T = T(t)$.

Let us connect first the expression for the time evolution of radiation energy derived in the first chapters with that obtained here for a distribution in equilibrium:

| (6.14) |

which is consistent if the temperature scales as

| $T \propto a^{-1}$ | (6.15) |

That is, the plasma cools as time passes and space expands; when the universe doubles in size the temperature falls by a half.

The same matching does not apply to non-relativistic particles however; eq. (6.13) scales with $e^{-m/T}$, which given the above would imply an exponential dependence on the scale factor, yet we know that for matter $\rho \propto a^{-3}$. The answer to this apparent contradiction is manifold and we shall unfold it in subsequent chapters. The short explanation is that we cannot neglect the chemical potential for matter, which today is very far from equilibrium.

The abundance in eq. (6.13) today can be understood as produced by photons so high up in energy in the tail of the distribution that they can pair-produce non-relativistic particles, say baryons. Photons of this type are however so rare that the abundance of these thermal baryons is negligible for all purposes, .

Finally, as pointed out in the beginning, the fact that the energy density in radiation scales with the most inverse powers of the scale factor means in turn that this type of energy used to dominate the total budget of the universe. The Friedmann equation then reads in this regime

| (6.16) |

where $g_*$ counts the number of relativistic degrees of freedom as in eq. (6.10).
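To get a feeling for the numbers in this regime, the sketch below evaluates the Hubble rate at a given temperature. It assumes that eq. (6.16) has the standard form $H^2 = \frac{8\pi G}{3}\,\frac{\pi^2}{30}\, g_* T^4$, with $G = 1/M_{\rm Pl}^2$ in natural units. It also assumes the radiation-era solution $a \propto t^{1/2}$ with constant $g_*$, so that $t = 1/(2H)$. The value $g_* = 10$ is a round illustrative number and the function names are introduced here.

```python
import math

M_PLANCK_GEV = 1.2209e19            # Planck mass, with G = 1 / M_Planck^2
GEV_INVERSE_IN_SECONDS = 6.582e-25  # hbar in GeV s

def hubble_radiation(T_GeV, g_star):
    """Hubble rate in GeV from H^2 = (8 pi G / 3) (pi^2 / 30) g_star T^4."""
    rho = math.pi ** 2 / 30.0 * g_star * T_GeV ** 4
    return math.sqrt(8.0 * math.pi * rho / 3.0) / M_PLANCK_GEV

def age_radiation(T_GeV, g_star):
    """Age in seconds, ASSUMING a ~ t^(1/2) so that t = 1 / (2 H)."""
    return GEV_INVERSE_IN_SECONDS / (2.0 * hubble_radiation(T_GeV, g_star))

T_GeV = 1e-3   # a temperature of 1 MeV
g_star = 10.0  # illustrative round value
age_seconds = age_radiation(T_GeV, g_star)
print(f"H(1 MeV) ~ {hubble_radiation(T_GeV, g_star):.2e} GeV")
print(f"t(1 MeV) ~ {age_seconds:.2f} s")
```

Since $H \propto T^2$ in this regime, raising the temperature by a factor of ten shortens the corresponding time by a factor of one hundred.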

6.3 Entropy conservation

One useful conservation equation is that of entropy for relativistic particles. One can derive this from the vanishing divergence of the stress-energy tensor; in particular for the first component we have, from eq. (4.17)

| (6.17) |

Using either Bose-Einstein or Fermi-Dirac distributions with a dependence solely on the energy, one can derive for relativistic particles:

| (6.18) |

next integration by parts where the boundary term vanishes gives the relation which back in the conservation equation

| (6.19) |

which, with $s = (\rho + p)/T$, says that the time dependence of the entropy density is given by the factor $a^{-3}$. You might be familiar with the conservation law $\partial_\mu T^{\mu\nu} = 0$, which comes from the invariance of the action under space-time shifts and gives in other settings total energy-momentum conservation, as in, say, QFT in Minkowski space. Here however the situation is more aptly described by fluid mechanics and thermodynamics: radiation's pressure means the substance does work with the expansion, and it is entropy instead that is conserved.

Problems

I Now that we have the distributions for relativistic spin 1 and 1/2 particles compute the exact expression for the abundance of photons and neutrinos given (recall there’s 3 in the SM with one chirality only.) [Hint: ]

II Check the result in eq. 6.6 explicitly using the expressions for , .

III At temperatures above the MeV, photons, neutrinos, electrons and positrons are present in the plasma with the same temperature; what is $g_*$ then? At the EW scale GeV all particles in the SM can be taken as relativistic; what would $g_*$ be then?

IV Show that Fermi-Dirac and Bose-Einstein distributions are related as as part of the series of averages of powers of energy

| (6.20) |

[Hint: Use and a change of variables, you do not have to perform the integrals explicitly]

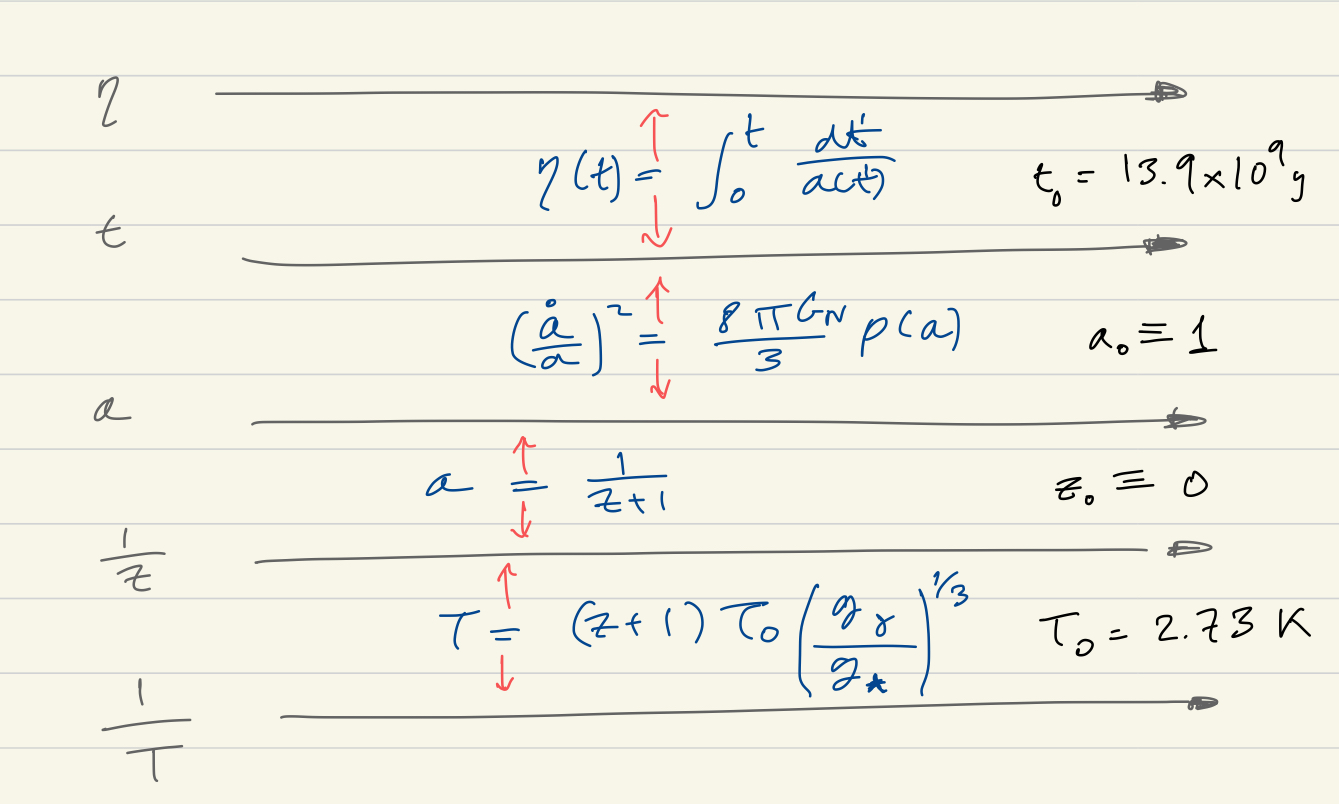

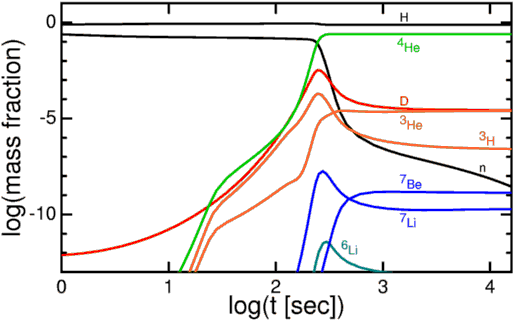

7 Turning back time

The framework has now been laid for the study of the universe at its largest scale. The observations that determine the state of the cosmos today, together with the evolution equations in the form of Friedmann’s equations, allow us to turn back the dial of time and reconstruct the history of the universe. The next chapters will take us back in time as far as solid evidence can take us.

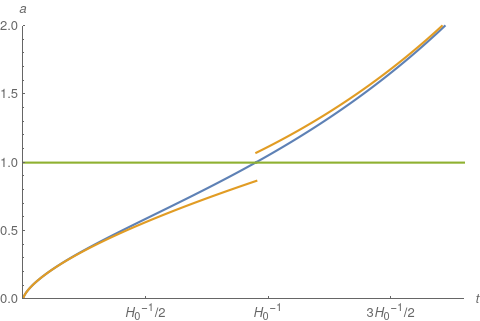

Reviewing the components of the universe, we found that the main contribution is in the form of a cosmological constant, followed by the matter contribution, neither of which is a substance we can produce in a lab. We can then take the short-term evolution of the universe to be given by

| (7.1) |

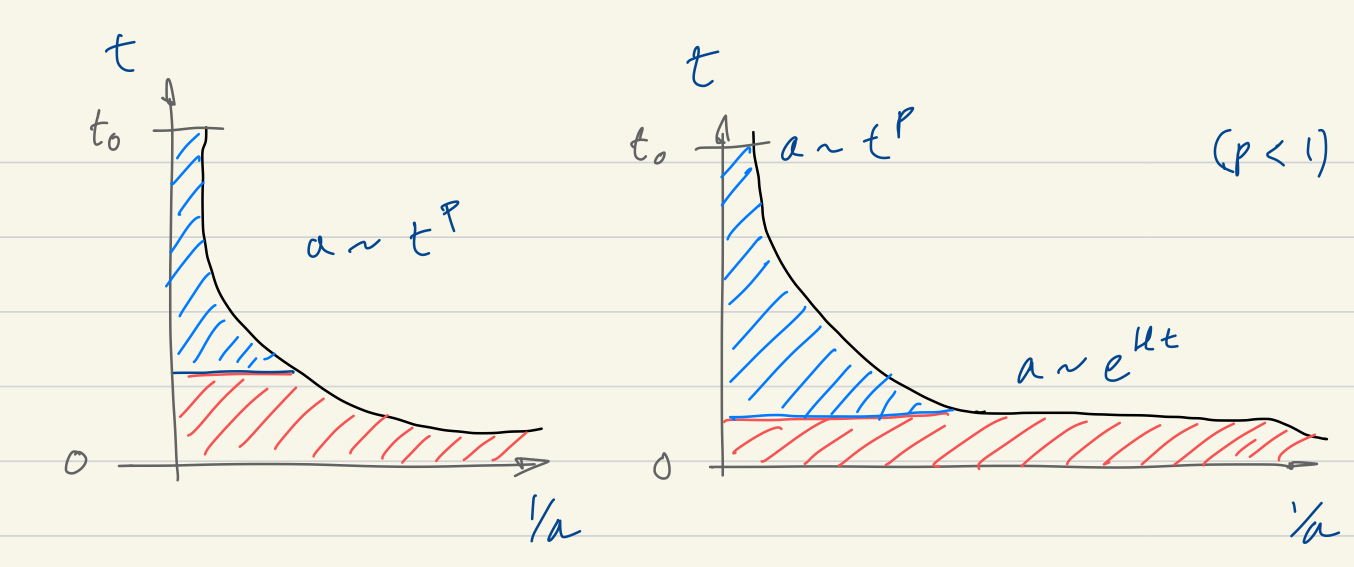

with the definition of and critical density as in (5.4). One can solve this differential equation exactly to find the growth of the universe in the future, but also how the growth used to be. Although the exact solution can be found with a little work and a table integral, the two limiting behaviours are good approximations in the respective regimes

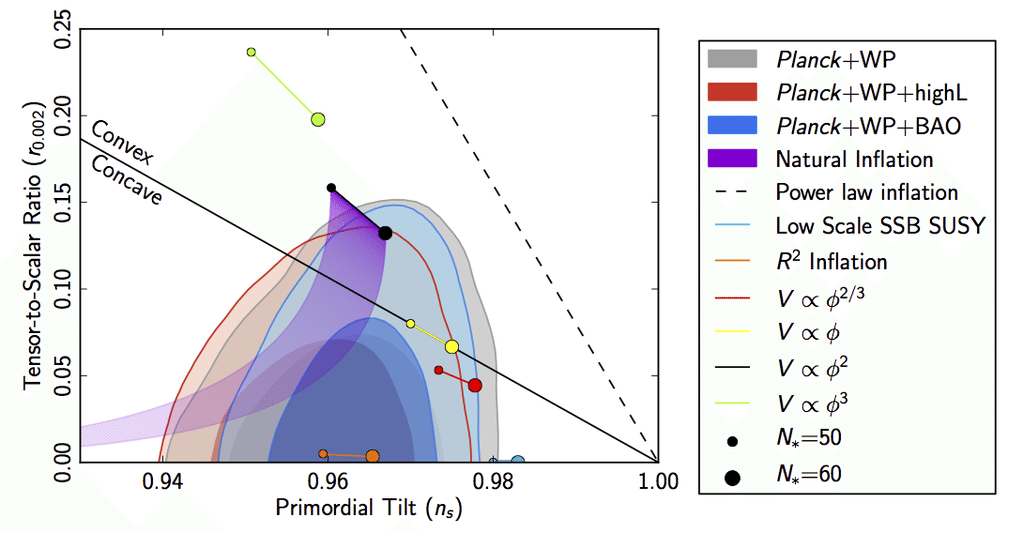

| (7.2) |